Understanding Algorithmic Bias in AI Systems

G’day, fellow AI enthusiasts and curious minds! As someone knee-deep in the world of artificial intelligence, I’ve found myself on a rollercoaster ride through the fascinating – and sometimes downright alarming – realm of algorithmic bias. Buckle up, because we’re about to dive into this digital quagmire with the enthusiasm of a computer scientist who’s had one too many cups of Earl Grey!

What is Algorithmic Bias?

Picture this: you’re scrolling through your social media feed, minding your own business, when suddenly you’re bombarded with adverts for beard oil and monster truck rallies. Plot twist – you’re a clean-shaven vegetarian who prefers bicycles. Welcome to the world of algorithmic bias, my friends!

In essence, algorithmic bias occurs when an AI system produces results that are systematically prejudiced because of flawed data or erroneous assumptions in the machine learning process. It’s like that one friend who always jumps to conclusions, but instead of causing awkward dinner party moments, it’s potentially affecting millions of lives.

There are various types of algorithmic bias, including:

- Sample bias: When the data used to train the AI doesn’t represent the population it’s supposed to serve. It’s like trying to understand British cuisine by only eating at Nando’s.

- Prejudice bias: When societal stereotypes creep into the AI’s decision-making process. Imagine an AI casting director that only recommends actors with posh accents for Shakespearean roles!

- Measurement bias: When the data collected is flawed or skewed. This is akin to measuring your height while standing on your tiptoes – not exactly accurate, is it?
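To make sample bias concrete, here is a toy sketch with entirely invented data. A straight line is fitted by ordinary least squares to a training set that is 95% group A and 5% group B, where the two groups follow slightly different relationships between x and y. The fit tracks the majority, so its error on the under-represented group is much larger.

```python
# Toy illustration of sample bias: a model fitted mostly on one group
# serves that group well and the under-represented group poorly.
# All data here are invented for illustration.

def fit_line(points):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def mean_abs_error(model, points):
    """Average absolute gap between the model's predictions and the true y."""
    slope, intercept = model
    return sum(abs(y - (slope * x + intercept)) for x, y in points) / len(points)

# Hypothetical ground truth: the groups differ by a constant offset.
def group_a(x):
    return 2 * x

def group_b(x):
    return 2 * x + 4

# Skewed training sample: 19 points from group A, only 1 from group B.
train = [(x, group_a(x)) for x in range(19)] + [(9, group_b(9))]
model = fit_line(train)

# Balanced evaluation: the same x values for both groups.
error_a = mean_abs_error(model, [(x, group_a(x)) for x in range(19)])
error_b = mean_abs_error(model, [(x, group_b(x)) for x in range(19)])
print(f"MAE group A: {error_a:.2f}, group B: {error_b:.2f}")
# MAE group A: 0.20, group B: 3.80
```

Note that an overall error averaged over a test set with the same 95/5 split would look reassuringly small, which is exactly why errors need reporting per group.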

Real-world examples of biased AI systems are, unfortunately, not hard to find. In 2018, Reuters reported that Amazon had scrapped an AI recruiting tool that showed bias against women [1]. The system had been trained on resumes submitted to the company over a 10-year period, most of which came from men. As a result, it penalized resumes that included the word “women’s” (as in “women’s chess club captain”) and downgraded graduates of two all-women’s colleges.

The Root Causes of Algorithmic Bias

Now, you might be wondering, “How does a supposedly objective system end up being biased?” Well, grab another biscuit and let me enlighten you.

Firstly, biased training data is a major culprit. If an AI is trained on data that reflects historical or societal biases, it’s going to perpetuate those biases. It’s like teaching a parrot using only tabloid headlines – you’re bound to end up with some questionable squawks.
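Here is a toy sketch of how a model inherits bias from historical decisions even when the sensitive attribute is withheld. The records, the postcode codes and the “model” (which simply learns the historical approval rate for each postcode) are all hypothetical; the point is that a proxy correlated with group membership carries the old pattern straight through.

```python
from collections import defaultdict

# Hypothetical historical loan decisions: (group, postcode, approved).
# Group membership is strongly tied to postcode, and past decision-makers
# approved group "B" applicants less often.
history = (
    [("A", "N1", True)] * 40 + [("A", "N1", False)] * 10 +
    [("B", "E9", True)] * 15 + [("B", "E9", False)] * 35
)

# "Fairness through unawareness": ignore the group column entirely and
# learn only the historical approval rate per postcode.
counts = defaultdict(lambda: [0, 0])  # postcode -> [approved, total]
for _group, postcode, approved in history:
    counts[postcode][0] += int(approved)
    counts[postcode][1] += 1
approval_rate = {pc: approved / total for pc, (approved, total) in counts.items()}

def predict(postcode, threshold=0.5):
    """Approve if the learned historical approval rate clears the threshold."""
    return approval_rate[postcode] >= threshold

# New applicants: the model never sees their group, yet outcomes still
# split along group lines because postcode acts as a proxy.
applicants = [("A", "N1"), ("B", "E9")]
decisions = {group: predict(pc) for group, pc in applicants}
print(approval_rate)  # {'N1': 0.8, 'E9': 0.3}
print(decisions)      # {'A': True, 'B': False}
```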

Secondly, flawed algorithm design can introduce bias. If the developers don’t account for potential biases in their models, the AI can end up making unfair decisions. It’s similar to writing a dating app algorithm that only matches people based on their shoe size – not exactly a recipe for lasting love, is it?

Thirdly, a lack of diversity in AI development teams can lead to blind spots. If everyone on the team shares similar backgrounds and experiences, they might miss potential biases that would be obvious to others. It’s like trying to solve a Rubik’s Cube while colourblind – you’re bound to miss some crucial perspectives.

Lastly, historical and societal biases often find their way into our data. As the saying goes, “garbage in, garbage out.” If our society has biases (spoiler alert: it does), those biases will be reflected in our data and, consequently, in our AI systems.

The Impact of Algorithmic Bias

Now, you might be thinking, “So what if an AI thinks I like monster trucks? No harm, no foul, right?” Well, not quite. The impact of algorithmic bias can be far-reaching and downright scary.

For starters, biased AI systems can perpetuate and even amplify existing inequalities. Imagine a biased AI system being used in loan approvals, healthcare decisions, or criminal justice. Suddenly, those harmless monster truck ads don’t seem so innocuous, do they?

A study by researchers at the University of Cambridge found that AI systems used in healthcare can perpetuate existing racial biases, potentially leading to poorer health outcomes for minority groups [2]. It’s like having a doctor who’s brilliant but only read medical textbooks from the 1950s – not exactly ideal for modern, inclusive healthcare.

There are also significant ethical concerns and fairness issues to consider. As AI systems become more prevalent in decision-making processes, we need to ensure they’re not unfairly discriminating against certain groups. It’s a bit like having a robot referee in a football match – we need to make sure it’s not secretly a fan of one team!

Legal and regulatory implications are another kettle of fish altogether. As governments and organizations grapple with the challenges of algorithmic bias, we’re seeing new regulations emerge. The EU’s AI Act, proposed by the European Commission in 2021, takes a risk-based approach: the greater the potential risk an AI system poses, the stricter the rules it must follow [3]. It’s like trying to create traffic laws for flying cars – we’re in uncharted territory, but we need to start somewhere!

Lastly, algorithmic bias can significantly impact public trust in AI technologies. If people perceive AI systems as biased or unfair, they may be less likely to accept and adopt these technologies. It’s like trying to convince someone to try haggis after they’ve heard all the horror stories – you’ve got an uphill battle on your hands!

Detecting and Measuring Algorithmic Bias

Now that we’ve covered the what, why, and “oh no!” of algorithmic bias, let’s talk about how we can spot these digital ne’er-do-wells.

Auditing AI systems for bias is a crucial step in ensuring fairness. This involves rigorously testing the system with diverse datasets to identify any disparities in outcomes across different groups. It’s like putting your AI through an obstacle course, but instead of physical challenges, it’s facing tests of fairness and equality.
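A common first check in such an audit is to compare selection rates across groups. The sketch below computes each group’s selection rate and the ratio of the lowest to the highest. The 0.8 cut-off is the “four-fifths rule”, a rule of thumb from US employment-selection guidelines, used here only as an illustrative threshold; the audit data are invented.

```python
from collections import Counter

def selection_rates(outcomes):
    """outcomes: iterable of (group, selected: bool). Returns group -> selection rate."""
    totals, selected = Counter(), Counter()
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    return min(rates.values()) / max(rates.values())

# Invented audit data: decisions produced by the system under test.
outcomes = ([("men", True)] * 60 + [("men", False)] * 40 +
            [("women", True)] * 30 + [("women", False)] * 70)

rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
print(rates)                   # {'men': 0.6, 'women': 0.3}
print(f"ratio = {ratio:.2f}")  # ratio = 0.50
if ratio < 0.8:                # four-fifths rule of thumb
    print("Potential adverse impact: investigate further.")
```

A low ratio is a signal to dig deeper rather than proof of unfairness on its own; the groups may differ in ways the audit has not yet accounted for.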

There are various bias detection tools and techniques available, such as the AI Fairness 360 toolkit developed by IBM [4]. These tools can help identify potential biases in datasets and machine learning models. Think of them as the AI equivalent of a metal detector, but instead of finding lost coins on the beach, they’re uncovering hidden biases in your algorithms.
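Toolkits like AI Fairness 360 package up many group-fairness metrics. As a library-free sketch of one metric of that kind (this does not use AIF360’s API), equal opportunity difference compares true positive rates: among people who genuinely merited a positive outcome, how often did the model say yes in each group? The labels, predictions and group names below are invented.

```python
def true_positive_rate(y_true, y_pred):
    """Share of actual positives that the model predicted as positive.

    Assumes the group contains at least one actual positive.
    """
    preds_for_positives = [p for t, p in zip(y_true, y_pred) if t == 1]
    return sum(preds_for_positives) / len(preds_for_positives)

def equal_opportunity_difference(y_true, y_pred, groups, unprivileged, privileged):
    """TPR(unprivileged) - TPR(privileged); 0 means equal opportunity."""
    def tpr_for(group):
        idx = [i for i, g in enumerate(groups) if g == group]
        return true_positive_rate([y_true[i] for i in idx], [y_pred[i] for i in idx])
    return tpr_for(unprivileged) - tpr_for(privileged)

# Invented evaluation data: 1 = qualified (y_true) / predicted qualified (y_pred).
y_true = [1, 1, 1, 1, 0, 0, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 1, 0, 0, 0, 0, 0]
groups = ["A"] * 6 + ["B"] * 6

eod = equal_opportunity_difference(y_true, y_pred, groups,
                                   unprivileged="B", privileged="A")
print(f"equal opportunity difference = {eod:.2f}")  # -0.50
```

Here qualified members of group B are recognised only a quarter of the time against three quarters for group A, a gap that overall accuracy alone would not reveal.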

However, detecting bias isn’t always straightforward. Some biases can be subtle and hard to detect, especially when they intersect with complex social factors. It’s like trying to find a needle in a haystack, except the needle is disguised as a piece of hay, and the haystack is the size of London!
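One reason bias hides so well is that checking one attribute at a time can miss problems at the intersections. In the invented numbers below, selection rates are identical by gender and identical by ethnicity (labelled P and Q purely for illustration), yet two intersectional subgroups fare far worse than the others.

```python
from collections import defaultdict

# Invented data: (gender, ethnicity) -> (number selected, number of applicants)
counts = {
    ("F", "P"): (8, 10), ("F", "Q"): (2, 10),
    ("M", "P"): (2, 10), ("M", "Q"): (8, 10),
}

def rate_by(attribute_index):
    """Selection rate grouped by one attribute (0 = gender, 1 = ethnicity)."""
    selected, total = defaultdict(int), defaultdict(int)
    for key, (s, t) in counts.items():
        selected[key[attribute_index]] += s
        total[key[attribute_index]] += t
    return {k: selected[k] / total[k] for k in total}

by_gender = rate_by(0)     # {'F': 0.5, 'M': 0.5}
by_ethnicity = rate_by(1)  # {'P': 0.5, 'Q': 0.5}
by_subgroup = {k: s / t for k, (s, t) in counts.items()}

print(by_gender, by_ethnicity)
print(by_subgroup)  # ('F', 'Q') and ('M', 'P') at 0.2, the other two at 0.8
```

An audit that only reports the first line would declare the system fair; the disparity appears only when the subgroups are examined directly.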

Strategies for Mitigating Algorithmic Bias

Fear not, dear reader! All is not lost in the battle against algorithmic bias. There are strategies we can employ to mitigate these digital prejudices.

First and foremost, we need diverse and representative training data. This means ensuring that our datasets reflect the diversity of the populations our AI systems will serve. It’s like creating a playlist for a party – you want to include a variety of genres to cater to everyone’s tastes.
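A first, mechanical step towards representative data is to compare each group’s share of the training set with its share of the population the system will serve. The sketch below does that and derives simple post-stratification weights (population share divided by sample share) that up-weight under-represented groups. The group names and population shares are hypothetical; in practice they would come from a reference source such as census data.

```python
from collections import Counter

def representation_report(sample_groups, population_shares):
    """Compare each group's share of the sample with its population share.

    Returns group -> (sample_share, population_share, weight), where
    weight = population_share / sample_share. A group missing from the
    sample gets an infinite weight: reweighting cannot fix absent data.
    """
    counts = Counter(sample_groups)
    n = len(sample_groups)
    report = {}
    for group, pop_share in population_shares.items():
        sample_share = counts[group] / n
        weight = pop_share / sample_share if sample_share > 0 else float("inf")
        report[group] = (sample_share, pop_share, weight)
    return report

# Hypothetical figures: the training set is 90% urban, the population 60% urban.
training_groups = ["urban"] * 90 + ["rural"] * 10
population = {"urban": 0.6, "rural": 0.4}

report = representation_report(training_groups, population)
for group, (s, p, w) in report.items():
    print(f"{group}: sample {s:.0%}, population {p:.0%}, weight {w:.2f}")
# urban: sample 90%, population 60%, weight 0.67
# rural: sample 10%, population 40%, weight 4.00
```

Weighting cannot create information that was never collected: a group with only a handful of records gets large weights and noisy estimates, so gathering more data from that group is usually the better fix.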

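Algorithmic fairness techniques can also help. These include methods like adversarial debiasing, in which the model is trained alongside a second “adversary” model that tries to guess a sensitive attribute (such as gender) from the first model’s predictions. The main model is penalized whenever the adversary succeeds, so it learns to make predictions that give away less about that attribute. It’s a bit like blindfolding the AI so it can’t see the characteristics we don’t want it to consider, with the bonus that it also can’t peek at them through proxies such as postcode – clever, eh?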
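Adversarial debiasing needs a full two-model training loop, so as a shorter illustration of a fairness technique here is a different, simpler one: reweighing (Kamiran and Calders), a pre-processing method that gives each (group, label) combination the weight P(group) × P(label) / P(group, label). After weighting, the label is statistically independent of the group in the training data. The dataset is invented, and the weights would then be passed to a learner that accepts per-sample weights (for example, the `sample_weight` argument that many scikit-learn estimators’ `fit` methods accept).

```python
from collections import Counter

def reweighing_weights(groups, labels):
    """Reweighing (Kamiran and Calders): w(g, y) = P(g) * P(y) / P(g, y).

    Combinations rarer than independence would predict get weights above 1;
    over-represented combinations get weights below 1.
    """
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): (group_counts[g] / n) * (label_counts[y] / n) / (count / n)
        for (g, y), count in joint_counts.items()
    }

def weighted_positive_rate(groups, labels, weights, group):
    """Share of the weighted examples in `group` that carry label 1."""
    pairs = [(g, y) for g, y in zip(groups, labels) if g == group]
    total = sum(weights[p] for p in pairs)
    positive = sum(weights[p] for p in pairs if p[1] == 1)
    return positive / total

# Invented training data: group "A" has far more positive labels than group "B".
groups = ["A"] * 50 + ["B"] * 50
labels = [1] * 35 + [0] * 15 + [1] * 15 + [0] * 35

weights = reweighing_weights(groups, labels)
for g in ("A", "B"):
    print(g, round(weighted_positive_rate(groups, labels, weights, g), 3))
# A 0.5
# B 0.5
```

Before weighting, the positive rates were 0.7 and 0.3; after weighting, both groups match the overall positive rate of 0.5, so a model trained on the weighted data no longer sees the group as predictive of the label.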

Interdisciplinary approaches to AI development are crucial. By bringing together experts from various fields – computer science, ethics, sociology, law, and more – we can create more holistic and fair AI systems. It’s like assembling the Avengers, but instead of fighting Thanos, they’re battling bias!

Ethical guidelines and best practices are also essential. Organizations like the IEEE have developed guidelines for ethically aligned design in AI systems [5]. These serve as a moral compass for AI developers, helping them navigate the murky waters of algorithmic fairness.

The Role of AI Governance and Policy

As we venture further into the AI-driven future, the role of governance and policy becomes increasingly important. It’s like trying to referee a Quidditch match – the game is complex, fast-paced, and the rules are still being written!

Current regulations addressing algorithmic bias are still in their infancy. The EU’s General Data Protection Regulation (GDPR) includes provisions on automated decision-making, notably Article 22, which gives people the right not to be subject to decisions based solely on automated processing that significantly affect them [6]; more specific AI regulations are in the works. It’s like watching a legislative game of catch-up, with policymakers sprinting to keep pace with technological advancements.

Global initiatives and collaborations are crucial in addressing algorithmic bias. Organizations like the Global Partnership on AI are working to foster international cooperation on AI governance [7]. It’s a bit like a United Nations for AI – minus the fancy New York headquarters (for now).

Transparency and accountability are key themes in AI governance. We need to ensure that AI systems are explainable and that there are clear mechanisms for redress when things go wrong. It’s like having a customer service department for AI – “Hello, you’ve reached the Department of Algorithmic Accountability. How may we assist you today?”

Future Directions in Combating Algorithmic Bias

As we peer into our crystal ball (or should that be a quantum computer?), what does the future hold for our battle against algorithmic bias?

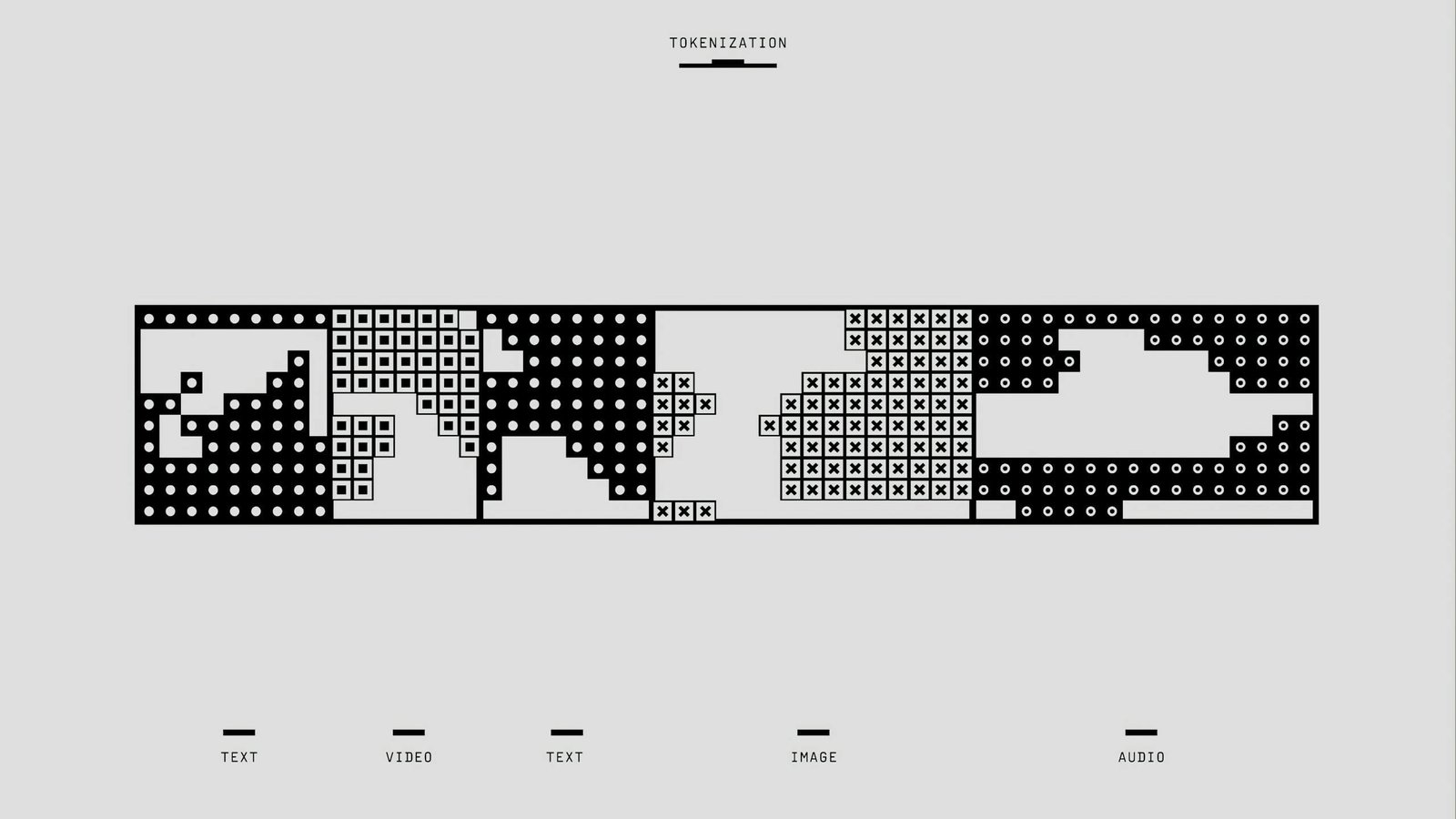

Emerging research and technologies offer hope. For instance, federated learning techniques allow AI models to be trained on distributed datasets without centralizing the data, potentially reducing privacy concerns and certain types of bias [8]. It’s like teaching an AI to bake by sending it to different kitchens around the world, rather than just one fancy Parisian pâtisserie.
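The core idea of federated averaging (FedAvg, introduced by McMahan et al. in 2017) can be sketched in a few lines: each client improves the shared model on its own data, and the server combines only the resulting parameters, weighting each client by how many examples it holds. The one-feature linear model, the client datasets and the learning settings below are hypothetical, and real deployments typically sample a subset of clients each round and use stochastic rather than full-batch updates.

```python
def local_update(w, b, data, lr=0.1, epochs=5):
    """Full-batch gradient descent on mean squared error, using one client's data only."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w, b = w - lr * grad_w, b - lr * grad_b
    return w, b

def federated_averaging(clients, rounds=200):
    """Server loop: broadcast the model, collect local updates, average by data size."""
    w, b = 0.0, 0.0
    total = sum(len(data) for data in clients)
    for _ in range(rounds):
        # Only parameters travel back to the server; raw data stays on each client.
        updates = [(local_update(w, b, data), len(data)) for data in clients]
        w = sum(uw * n for (uw, _), n in updates) / total
        b = sum(ub * n for (_, ub), n in updates) / total
    return w, b

# Hypothetical clients ("kitchens"): all follow y = 2x + 1,
# but each covers a different range of x.
clients = [
    [(x / 10, 2 * x / 10 + 1) for x in range(0, 5)],
    [(x / 10, 2 * x / 10 + 1) for x in range(5, 10)],
    [(x / 10, 2 * x / 10 + 1) for x in range(10, 20)],
]
w, b = federated_averaging(clients)
print(f"w = {w:.3f}, b = {b:.3f}")  # w = 2.000, b = 1.000
```

No single client could pin down the relationship well from its own narrow slice of x, but the averaged model recovers it. Weighting by data size also means clients with little data have little say, which is one reason federated learning can reduce some kinds of bias but not all.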

Explainable AI (XAI) is another exciting frontier. By making AI decision-making processes more transparent and interpretable, we can better identify and address biases. It’s like giving our AI systems the ability to show their work, just like your maths teacher always insisted!
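For a linear scoring model, “showing its work” can be exact. Each feature’s contribution is its weight times how far the applicant’s value sits from a baseline (for example, the average applicant), and the contributions add up to the difference between the applicant’s score and the baseline score. For linear models with independent features this matches what SHAP-style methods report. The weights, feature names and values below are hypothetical; the sketch shows how such an explanation could expose a proxy like postcode driving a decision.

```python
# Hypothetical linear credit-scoring model: score = bias + sum(weight * value).
weights = {"income": 0.4, "years_employed": 0.3, "postcode_risk": -1.2}
bias = 0.5

def score(applicant):
    return bias + sum(weights[f] * applicant[f] for f in weights)

def explain(applicant, baseline):
    """Per-feature contributions relative to a baseline applicant.

    For a linear model these are exact: they sum to
    score(applicant) - score(baseline).
    """
    return {f: weights[f] * (applicant[f] - baseline[f]) for f in weights}

baseline = {"income": 1.0, "years_employed": 1.0, "postcode_risk": 0.5}  # e.g. dataset averages
applicant = {"income": 1.2, "years_employed": 1.5, "postcode_risk": 1.0}

contributions = explain(applicant, baseline)
for feature, c in sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{feature:>15}: {c:+.2f}")
# postcode_risk is listed first: it outweighs income and employment combined
```

Non-linear models need approximate explanation methods, which is where much of the current XAI research effort goes.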

However, challenges remain. As AI systems become more complex, detecting and mitigating bias becomes increasingly difficult. It’s a bit like playing whack-a-mole, but the moles are invisible and keep changing the rules of the game.

Conclusion

Well, dear reader, we’ve journeyed through the labyrinth of algorithmic bias, armed with nothing but our wits and a healthy dose of British humour. From biased recruitment tools to the promise of explainable AI, we’ve covered quite a bit of ground!

Understanding and addressing algorithmic bias is crucial as AI continues to permeate our lives. It’s not just about fairness – it’s about creating AI systems that truly benefit all of humanity, not just a privileged few.

So, what can you do? Stay informed, ask questions, and don’t be afraid to challenge the AI systems you encounter. After all, a little healthy skepticism never hurt anyone – especially when it comes to algorithms that might be deciding your future!

And remember, the next time you’re puzzled by a peculiar AI-driven recommendation, it might not be Skynet trying to manipulate you – it could just be a case of good old-fashioned algorithmic bias. Cheerio!

References

[1] Dastin, J. (2018). Amazon scraps secret AI recruiting tool that showed bias against women. Reuters.

[2] Kantamneni, N., et al. (2022). Addressing racial bias in AI healthcare systems. Nature Medicine, 28(6), 1153-1155.

[3] European Commission. (2021). Proposal for a Regulation laying down harmonised rules on artificial intelligence.

[4] Bellamy, R. K., et al. (2019). AI Fairness 360: An extensible toolkit for detecting and mitigating algorithmic bias. IBM Journal of Research and Development, 63(4/5), 4:1-4:15.

[5] IEEE. (2019). Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems.

[6] Goodman, B., & Flaxman, S. (2017). European Union regulations on algorithmic decision-making and a “right to explanation”. AI Magazine, 38(3), 50-57.

[7] Global Partnership on AI. (2020). Working groups.

[8] Kairouz, P., et al. (2021). Advances and open problems in federated learning. Foundations and Trends in Machine Learning, 14(1-2), 1-210.

Avi is a researcher educated at the University of Cambridge, specialising in the intersection of AI Ethics and International Law. Recognised by the United Nations for his work on autonomous systems, he translates technical complexity into actionable global policy. His research provides a strategic bridge between machine learning architecture and international governance.
