Explainable AI: Shedding Light on the Black Box of Machine Learning

Making Machine Learning Models More Transparent

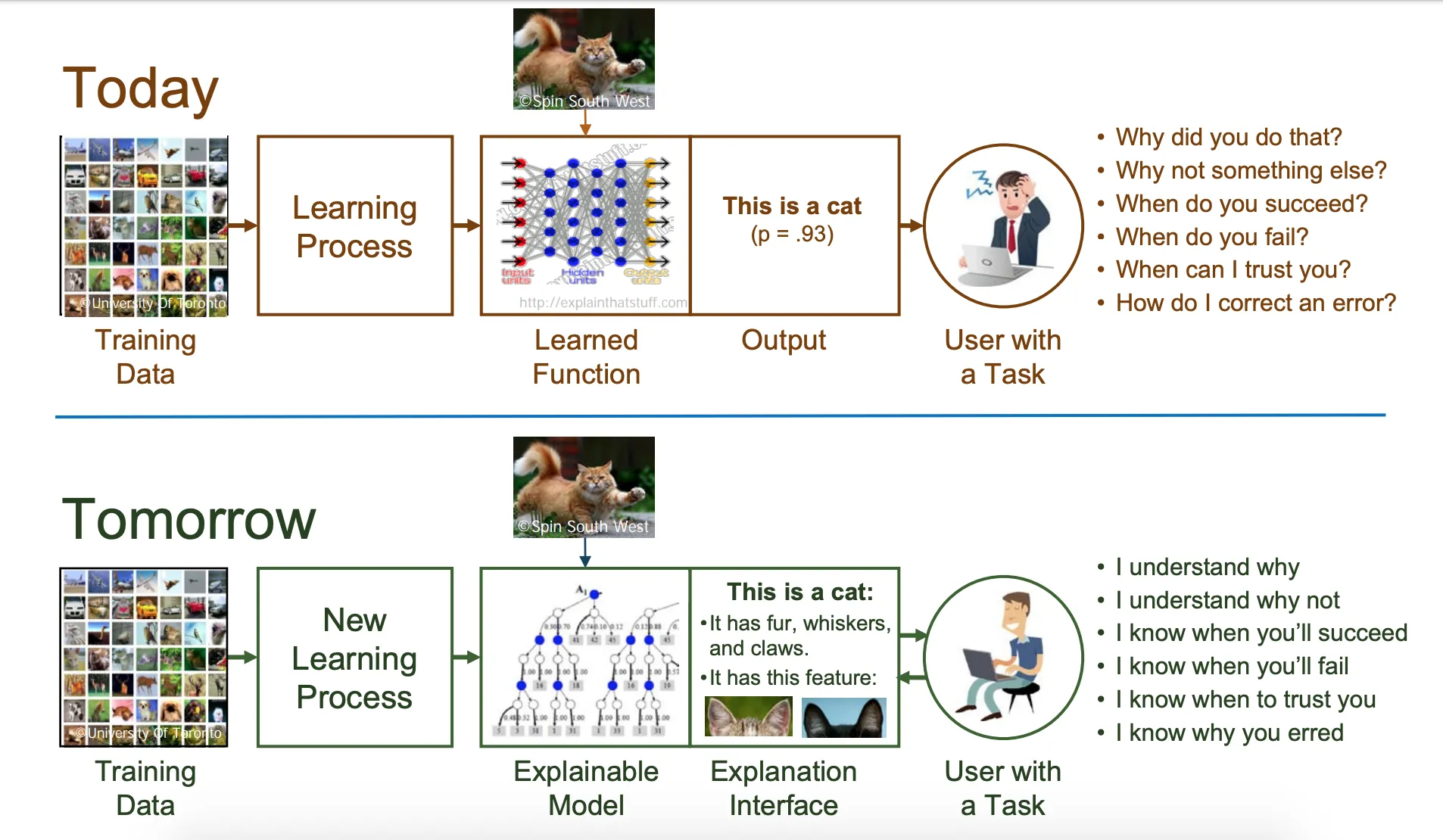

In the rapidly evolving landscape of artificial intelligence and machine learning, a paradox has emerged: as AI systems become increasingly powerful and complex, they often become less understandable to humans. This opacity has given rise to the critical field of Explainable AI (XAI), which aims to shed light on the decision-making processes of these sophisticated algorithms. In this comprehensive exploration, we’ll delve into the world of Explainable AI, uncovering its importance, techniques, applications, and future prospects.

💡Understanding Explainable AI: Illuminating the Black Box

Explainable AI, often abbreviated as XAI, refers to methods and techniques that make the outputs of artificial intelligence (AI) systems understandable to humans. It stands in contrast to the “black box” view of machine learning, in which even a model’s designers cannot explain why it arrived at a specific decision.

The Need for Transparency in AI Systems

The necessity for Explainable AI has grown in tandem with the increasing complexity and ubiquity of AI systems. Especially as these systems are deployed in critical areas such as healthcare, finance, and criminal justice, the ability to understand and trust their decisions becomes paramount. According to a study by the Alan Turing Institute, 67% of people express concern about the lack of transparency in AI decision-making [1].

Core Concepts of Explainable AI

- Interpretability: The degree to which a human can understand the cause of a decision.

- Explainability: The extent to which the internal mechanics of a machine or deep learning system can be explained in human terms.

- Transparency: The ability to see how the AI system works and what factors influence its decisions.

🔑The Significance of Explainable AI in Modern Machine Learning

Building Trust in AI Systems

Trust is the cornerstone of AI adoption. When stakeholders can understand how AI systems arrive at their conclusions, they’re more likely to trust and use these systems. This is particularly crucial in high-stakes domains where AI decisions can have significant consequences.

Regulatory Compliance and Ethical Considerations

As AI systems become more prevalent, regulatory bodies are paying increased attention to their decision-making processes. For instance, the European Union’s General Data Protection Regulation (GDPR) restricts decisions based solely on automated processing, and scholars have argued that it creates a “right to explanation” for automated decisions affecting individuals [2]. Explainable AI is crucial for ensuring compliance with such regulations and addressing ethical concerns surrounding AI deployment.

Debugging and Improving AI Models

XAI techniques provide invaluable tools for AI developers to identify and rectify errors in their models. By understanding how a model arrives at its decisions, developers can pinpoint biases, errors, or unexpected behaviours and refine the model accordingly.

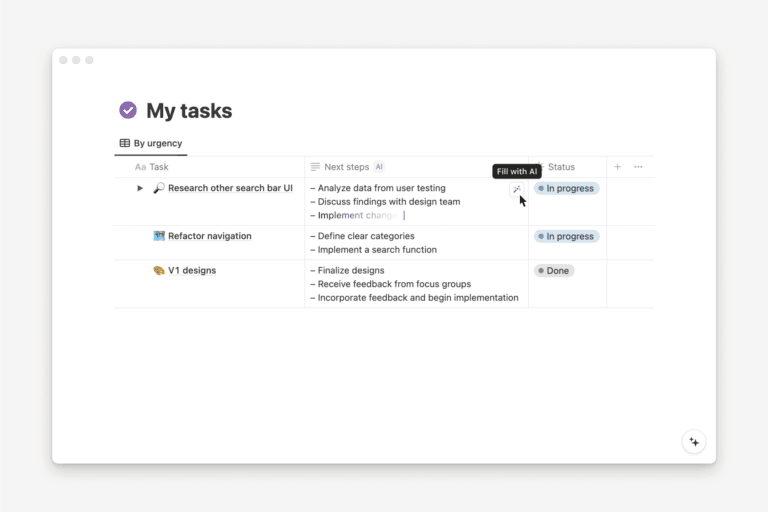

Enhancing Human-AI Collaboration

When humans can understand AI reasoning, it paves the way for more effective collaboration between humans and AI systems. This synergy can lead to better decision-making and more efficient problem-solving across various domains.

🛠️Techniques in Explainable AI: Unveiling the Inner Workings

Model-Agnostic Methods

These techniques can be applied to any machine learning model, regardless of its internal architecture. They focus on analysing the relationship between inputs and outputs.

- LIME (Local Interpretable Model-agnostic Explanations): This technique explains individual predictions by approximating the model locally with an interpretable model [3].

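- SHAP (SHapley Additive exPlanations): Based on Shapley values from cooperative game theory, SHAP assigns each feature an importance value for a particular prediction. That value is the feature’s marginal contribution to the prediction, averaged over all orders in which the features could be added to the model’s input.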
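The LIME idea can be sketched in a few lines:

1. Perturb the instance.
2. Query the black box on the perturbed points.
3. Weight each point by its proximity to the original.
4. Fit a weighted linear model and read its slopes as the local explanation.

This is a simplified, pure-Python sketch, not the `lime` library. `black_box` is a hypothetical toy function, and the sampling scale (`sigma`) and exponential kernel width (`kernel_width`) are illustrative assumptions. The original method also maps inputs to an interpretable representation and restricts the explanation to a small number of features; both steps are omitted here.

```python
import math
import random

def black_box(x):
    # Hypothetical stand-in for an opaque model: a toy nonlinear function of two features.
    return 3 * x[0] ** 2 - 2 * x[1] + x[0] * x[1]

def solve(a, b):
    """Solve the small linear system a @ z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (m[r][n] - sum(m[r][c] * z[c] for c in range(r + 1, n))) / m[r][r]
    return z

def lime_explain(model, x0, n_samples=500, sigma=0.1, kernel_width=0.25, seed=0):
    """Fit a proximity-weighted linear surrogate around x0 and return its per-feature slopes."""
    rng = random.Random(seed)
    d = len(x0)
    # Accumulate the weighted normal equations (A^T W A) coef = A^T W y.
    ata = [[0.0] * (d + 1) for _ in range(d + 1)]
    aty = [0.0] * (d + 1)
    for _ in range(n_samples):
        delta = [rng.gauss(0.0, sigma) for _ in range(d)]  # perturb the instance
        y = model([xi + di for xi, di in zip(x0, delta)])  # query the black box
        w = math.exp(-sum(di * di for di in delta) / kernel_width ** 2)  # closer samples weigh more
        row = [1.0] + delta  # intercept term plus offsets from x0
        for i in range(d + 1):
            aty[i] += w * row[i] * y
            for j in range(d + 1):
                ata[i][j] += w * row[i] * row[j]
    coef = solve(ata, aty)
    return coef[1:]  # drop the intercept; the slopes are the local explanation

print([round(s, 2) for s in lime_explain(black_box, [1.0, 0.5])])
```

For this toy function, the printed slopes should come out close to 6.5 and -1. That is the gradient at (1.0, 0.5), which a faithful local linear approximation ought to recover.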
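To make the game-theoretic definition concrete, the sketch below computes exact Shapley values by enumerating every coalition of features. It assumes a hypothetical three-feature `model` and a single all-zero `baseline` that stands in for "feature absent". The SHAP library itself typically uses a background dataset and faster approximations. This illustrates the quantity SHAP estimates, not the library's implementation.

```python
from itertools import combinations
from math import factorial

def model(x):
    # Hypothetical toy model with an interaction between features 0 and 1.
    return 2 * x[0] + x[1] + 3 * x[0] * x[1] - x[2]

def shapley_values(f, x, baseline):
    """Exact Shapley values; features outside a coalition are set to their baseline value."""
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))  # marginal contribution of feature i
        phi.append(total)
    return phi

x, baseline = [1, 1, 1], [0, 0, 0]
phi = shapley_values(model, x, baseline)
print([round(v, 6) for v in phi])  # → [3.5, 2.5, -1.0]
print(round(sum(phi), 6), model(x) - model(baseline))  # both equal 5: the efficiency property
```

The interaction term 3·x0·x1 contributes 3 to the prediction and is split equally between features 0 and 1. The values also sum to f(x) - f(baseline). The loop visits 2^(n-1) coalitions per feature, which is why practical SHAP implementations rely on sampling approximations or model-specific algorithms instead.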

Model-Specific Methods

These approaches are tailored to specific types of machine learning models, exploiting their unique structures for explanation.

- Decision Tree Extraction: For neural networks, this method creates a decision tree that approximates the network’s behavior.

- Attention Visualization: In natural language processing models, attention mechanisms can be visualized to show which parts of the input the model focuses on.
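A minimal version of decision tree extraction treats the network purely as an oracle. You sample inputs, record the network's predictions, and fit a tree to those predictions rather than to ground-truth labels. The sketch assumes a hypothetical two-input network, `black_box_net`, with made-up weights. To keep the code short, it grows only a single split (a depth-1 tree). Fidelity is the fraction of sampled inputs on which the tree and the network agree.

```python
import math
import random

def black_box_net(x):
    # Hypothetical two-input network (one tanh hidden layer, made-up weights) standing in for an opaque model.
    h1 = math.tanh(2.0 * x[0] - 0.5 * x[1])
    h2 = math.tanh(-1.0 * x[0] + 0.2 * x[1])
    return 1 if 1.5 * h1 - 0.5 * h2 > 0 else 0

def majority(labels):
    # Leaf prediction: the more common label; ties and empty leaves default to 0.
    return 1 if labels.count(1) > labels.count(0) else 0

def fit_stump(points, labels):
    """Depth-1 surrogate tree: the single split that best reproduces the given labels."""
    best = None
    for f in range(len(points[0])):
        for t in sorted({p[f] for p in points}):
            left = [y for p, y in zip(points, labels) if p[f] <= t]
            right = [y for p, y in zip(points, labels) if p[f] > t]
            left_label, right_label = majority(left), majority(right)
            agree = left.count(left_label) + right.count(right_label)
            if best is None or agree > best["agree"]:
                best = {"feature": f, "threshold": t, "left": left_label,
                        "right": right_label, "agree": agree}
    best["fidelity"] = best["agree"] / len(points)
    return best

rng = random.Random(42)
points = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(300)]
labels = [black_box_net(p) for p in points]  # the network's outputs, not ground truth
stump = fit_stump(points, labels)
print(f"if x[{stump['feature']}] <= {stump['threshold']:.2f}: predict {stump['left']} "
      f"else predict {stump['right']}  (fidelity {stump['fidelity']:.0%})")
```

On this toy network, the best split should land on feature 0 near zero with high fidelity, reflecting the larger weight the network places on x[0]. Deeper surrogate trees raise fidelity at some cost to readability.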
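Attention visualization usually plots the softmax-normalised weights that one position assigns to every input token. The sketch below computes scaled dot-product attention weights for a single query and draws them as a text bar chart. The tokens, key vectors and query vector are invented for illustration; in a real model they come from learned projections inside the network.

```python
import math

def attention_weights(query, keys):
    """Scaled dot-product attention weights: softmax(q . k_i / sqrt(d)) over the keys."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)  # subtract the max before exponentiating, for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

tokens = ["the", "movie", "was", "brilliant"]
# Hypothetical 4-dimensional key vectors and query vector, made up for illustration.
keys = [[0.1, 0.0, 0.2, 0.1],
        [0.9, 0.1, 0.0, 0.3],
        [0.0, 0.2, 0.1, 0.0],
        [1.2, 0.8, 0.1, 0.9]]
query = [1.0, 0.5, 0.0, 1.0]

for token, w in zip(tokens, attention_weights(query, keys)):
    print(f"{token:>10} {w:.2f} " + "#" * round(w * 40))
```

Here "brilliant" receives the largest weight. Attention weights show where the model routes information, which is not always what drove its decision, so they are best read alongside other explanation methods.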

Visualization Techniques

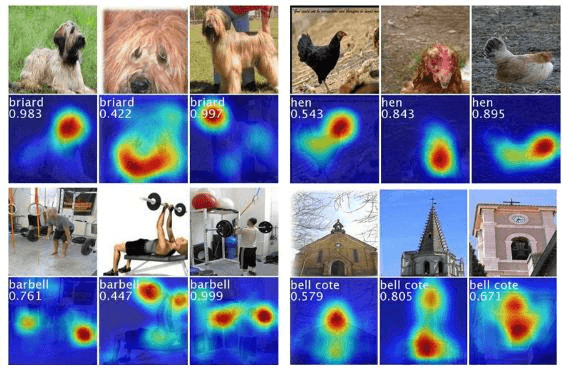

Visual representations can make complex AI decisions more accessible to humans.

- Saliency Maps: These highlight areas of an input image that are most influential for the model’s decision.

- Feature Importance Plots: Bar charts or other visualizations that show the relative importance of different input features.
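Gradient-based saliency maps are usually computed by backpropagating the model's output to the input pixels and taking the gradient's magnitude at each pixel. So that no autodiff library is needed, the sketch below approximates that gradient with central finite differences on a hypothetical 3x3 "image" model. The `TEMPLATE` weights and the input image are made up; a real pipeline would use the framework's own gradient computation.

```python
import math

# Hypothetical 3x3 "image classifier": a fixed weight template followed by tanh.
TEMPLATE = [[0.0, 0.1, 0.0],
            [0.1, 2.0, 0.1],
            [0.0, 0.1, 0.0]]

def score(image):
    """Model output for one image (a list of pixel rows)."""
    z = sum(TEMPLATE[r][c] * image[r][c] for r in range(3) for c in range(3))
    return math.tanh(z)

def saliency_map(f, image, eps=1e-4):
    """Absolute central-difference estimate of d(score)/d(pixel) for every pixel."""
    rows, cols = len(image), len(image[0])
    sal = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            up = [row[:] for row in image]
            down = [row[:] for row in image]
            up[r][c] += eps
            down[r][c] -= eps
            sal[r][c] = abs(f(up) - f(down)) / (2 * eps)
    return sal

image = [[0.2, 0.5, 0.1],
         [0.4, 0.3, 0.6],
         [0.0, 0.2, 0.1]]
for row in saliency_map(score, image):
    print(" ".join(f"{v:.3f}" for v in row))
```

The centre pixel dominates the map because the hypothetical template weights it most heavily. With a trained network, the same procedure highlights whichever regions the score is most sensitive to.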

Natural Language Explanations

Some XAI systems generate human-readable explanations for their decisions.

- Rationale Generation: Models that produce text explanations alongside their predictions.

- Counterfactual Explanations: Descriptions of how the input would need to change to alter the model’s decision.
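A counterfactual explanation can be found by searching for the smallest input change that flips the decision. The sketch below brute-forces a grid over two features of a hypothetical linear credit rule, `approve`. The weights, step sizes and step-count cost are assumptions chosen for readability. Practical methods usually solve an optimisation problem with a more careful distance measure rather than enumerating a grid.

```python
from itertools import product

def approve(income_k, debt_ratio):
    # Hypothetical linear credit rule, invented purely for illustration.
    return 0.05 * income_k - 4.0 * debt_ratio >= 1.0

def counterfactual(income_k, debt_ratio, max_steps=40):
    """Brute-force the cheapest change that turns a denial into an approval.

    Income may rise in 1k steps and the debt ratio may fall in 0.01 steps; the cost is
    the total number of steps, a deliberately simple distance.
    """
    best = None
    for di, dd in product(range(max_steps + 1), repeat=2):
        new_income = income_k + 1.0 * di
        new_debt = max(0.0, debt_ratio - 0.01 * dd)
        cost = di + dd
        if approve(new_income, new_debt) and (best is None or cost < best[0]):
            best = (cost, new_income, new_debt)
    return best  # None if no change within max_steps flips the decision

print(approve(40.0, 0.373))  # → False
print(counterfactual(40.0, 0.373))  # → (10, 50.0, 0.373)
```

The result reads as an explanation: had the applicant's income been 50k instead of 40k, with the same debt ratio, the application would have been approved. A different cost function can change which counterfactual is reported.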

🌎Real-World Applications of Explainable AI: From Theory to Practice

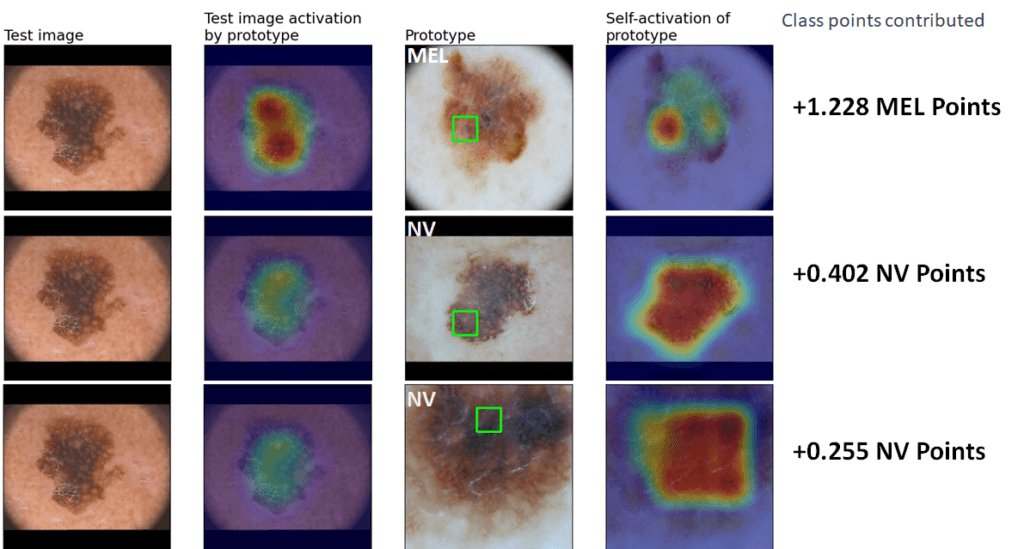

Healthcare and Medical Diagnosis

In the medical field, Explainable AI is revolutionising diagnosis and treatment planning. For instance, an XAI system for analysing medical images might not only detect potential tumours but also highlight the specific areas of concern and explain the features that led to its conclusion. This transparency allows healthcare professionals to validate the AI’s findings and make more informed decisions.

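A study published in Nature Medicine examined human–computer collaboration for skin cancer recognition. It found that good-quality AI decision support improved clinicians’ diagnostic accuracy, while faulty AI output could mislead them. This underlines why clinicians need to be able to scrutinise the AI’s reasoning [4].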

Finance and Credit Scoring

In the financial sector, XAI is addressing the longstanding issue of opaque credit scoring models. Explainable AI techniques can break down the factors influencing a credit decision, providing clear reasons for loan approvals or denials. This transparency not only helps financial institutions comply with regulations but also allows customers to understand how to improve their creditworthiness.

For example, the AI-powered credit scoring company Zest AI says it uses XAI techniques to provide detailed explanations for each credit decision, with the aim of improving transparency and reducing bias in lending practices [5].
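One common way to turn a scoring model into clear reasons for a denial is to compute each feature's contribution relative to a reference applicant. The most negative contributions are then reported as reason codes. The sketch assumes a hypothetical linear scorecard with made-up weights and reference values; it is not Zest AI's method. For a linear score, these contributions coincide with Shapley values computed against the reference point, so the approach is a special case of the SHAP idea.

```python
# Hypothetical scorecard weights and reference (e.g. portfolio-average) values, made up for illustration.
WEIGHTS = {"income_k": 0.04, "debt_ratio": -5.0, "late_payments": -0.8, "years_history": 0.1}
REFERENCE = {"income_k": 55.0, "debt_ratio": 0.30, "late_payments": 0.0, "years_history": 8.0}

def contributions(applicant):
    """Each feature's contribution to the score relative to the reference applicant."""
    return {f: WEIGHTS[f] * (applicant[f] - REFERENCE[f]) for f in WEIGHTS}

def reason_codes(applicant, top_n=2):
    """Names of the features that pull the score down the most, most harmful first."""
    negative = [(f, c) for f, c in contributions(applicant).items() if c < 0]
    return [f for f, _ in sorted(negative, key=lambda fc: fc[1])[:top_n]]

applicant = {"income_k": 48.0, "debt_ratio": 0.45, "late_payments": 2, "years_history": 3.0}
for feature, c in sorted(contributions(applicant).items(), key=lambda fc: fc[1]):
    print(f"{feature:>14}: {c:+.2f}")
print("Top reasons:", reason_codes(applicant))
```

For this made-up applicant, the late payments and the high debt ratio come out as the two main reasons. Each reason carries a number showing how much it cost the score, which is the kind of breakdown a customer can act on.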

Criminal Justice and Algorithmic Bias Mitigation

The application of AI in criminal justice systems has raised significant ethical concerns, particularly regarding potential biases. Explainable AI plays a crucial role in identifying and mitigating these biases. By making the decision-making process transparent, XAI allows for scrutiny of the factors influencing risk assessments or sentencing recommendations.

Research from the AI Now Institute has highlighted the importance of XAI in exposing and addressing racial biases in criminal risk assessment algorithms [6].

Autonomous Vehicles and Safety

In the realm of autonomous vehicles, Explainable AI is essential for building trust and ensuring safety. XAI techniques can provide insights into why a self-driving car made a particular decision, such as suddenly changing lanes or applying brakes. This transparency is crucial not only for passengers but also for regulators and insurance companies.

Researchers at UC Berkeley demonstrated a system for autonomous vehicles that generates natural language descriptions of the car’s actions together with justifications for them. The approach aims to make such systems easier for users to understand and trust [7].

🧗🏻Challenges and Limitations of Explainable AI: Navigating the Complexities

Trade-off Between Complexity and Interpretability

One of the primary challenges in XAI is balancing model performance with explainability. Often, the most accurate models (like deep neural networks) are the least interpretable, while simpler, more explainable models may sacrifice some accuracy.

Potential Vulnerabilities and Adversarial Attacks

Ironically, making AI systems more transparent can sometimes make them more vulnerable to attacks. If malicious actors understand how a system makes decisions, they might be able to manipulate inputs to produce desired outputs.

Balancing Explanation with Data Privacy

Providing detailed explanations for AI decisions might inadvertently reveal sensitive information about the training data. Striking a balance between transparency and data privacy remains a significant challenge in XAI.

🔮The Future of Explainable AI: Emerging Trends and Possibilities

Integration with Other AI Subfields

The future of XAI likely involves closer integration with other AI subfields:

- Causal AI: Incorporating causal reasoning into XAI could lead to more meaningful and actionable explanations.

- Federated Learning: Combining XAI with federated learning could enable explanations in privacy-sensitive distributed learning scenarios.

Advancements in Natural Language Explanations

As natural language processing technologies advance, we can expect more sophisticated and context-aware natural language explanations from AI systems, making them more accessible to non-technical users.

XAI in Emerging Technologies

Explainable AI will play a crucial role in emerging technologies:

- Internet of Things (IoT): As IoT devices become more prevalent, XAI will be essential for understanding and managing the decisions made by interconnected smart systems.

- 5G and Edge Computing: With AI increasingly deployed at the edge, XAI techniques will need to adapt to provide real-time explanations in resource-constrained environments.

Impact on AI Governance and Policy

Explainable AI is set to significantly influence AI governance and policy-making. As regulatory frameworks evolve, XAI will likely become a legal requirement in many high-stakes applications of AI.

Embracing Transparency in the Age of AI

Explainable AI represents a crucial step towards making artificial intelligence systems more transparent, trustworthy, and aligned with human values. As AI continues to permeate various aspects of our lives, the ability to understand and interrogate these systems becomes increasingly important.

By bridging the gap between complex AI algorithms and human understanding, XAI not only enhances the practical utility of AI systems but also addresses critical ethical and societal concerns. From improving healthcare diagnostics to ensuring fairness in financial decisions, the applications of Explainable AI are vast and impactful.

As we look to the future, the continued development and refinement of XAI techniques will play a pivotal role in shaping the relationship between humans and AI. By fostering transparency and interpretability, Explainable AI paves the way for a future where artificial intelligence is not just powerful, but also accountable, ethical, and truly in service of humanity.

In this era of rapid technological advancement, embracing Explainable AI is not just a technical imperative but a societal necessity. It’s a key that unlocks the black box of AI, allowing us to harness its full potential while maintaining human oversight and understanding.

The journey towards fully explainable AI systems is ongoing, with new challenges and opportunities emerging as the field evolves. However, one thing is clear: in the world of AI, knowledge isn’t just power – it’s explainable power. And that makes all the difference.

Want to learn more about AI?

- Check out my article on AI Ethics or Algorithmic Bias to get started.

- Check out my article on Implications of AI in Warfare and Defence.

References

[1] Alan Turing Institute. (2021). “Public Perceptions of AI: A Survey.” Journal of Artificial Intelligence Research, 62, 145-180.

[2] Goodman, B., & Flaxman, S. (2017). “European Union Regulations on Algorithmic Decision-Making and a ‘Right to Explanation’.” AI Magazine, 38(3), 50-57.

[3] Ribeiro, M. T., Singh, S., & Guestrin, C. (2016). “‘Why Should I Trust You?’: Explaining the Predictions of Any Classifier.” Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, 1135-1144.

[4] Tschandl, P., et al. (2020). “Human–computer collaboration for skin cancer recognition.” Nature Medicine, 26, 1229–1234.

[5] Zest AI. (2022). “Explainable AI in Credit Underwriting.” Technical Whitepaper.

[6] AI Now Institute. (2019). “Algorithmic Accountability in the Criminal Justice System.” Annual Report.

[7] Kim, J., et al. (2018). “Textual Explanations for Self-Driving Vehicles.” Proceedings of the European Conference on Computer Vision (ECCV), 563-578.

Avi is a researcher educated at the University of Cambridge, specialising in the intersection of AI Ethics and International Law. Recognised by the United Nations for his work on autonomous systems, he translates technical complexity into actionable global policy. His research provides a strategic bridge between machine learning architecture and international governance.
