Making AI Safe

Leading tech firms are racing to deploy powerful AIs without guardrails. As a global community, we must demand transparency, ethics, and safety-first regulation.

Find out how you can help

AI is transforming our world. We must choose how.

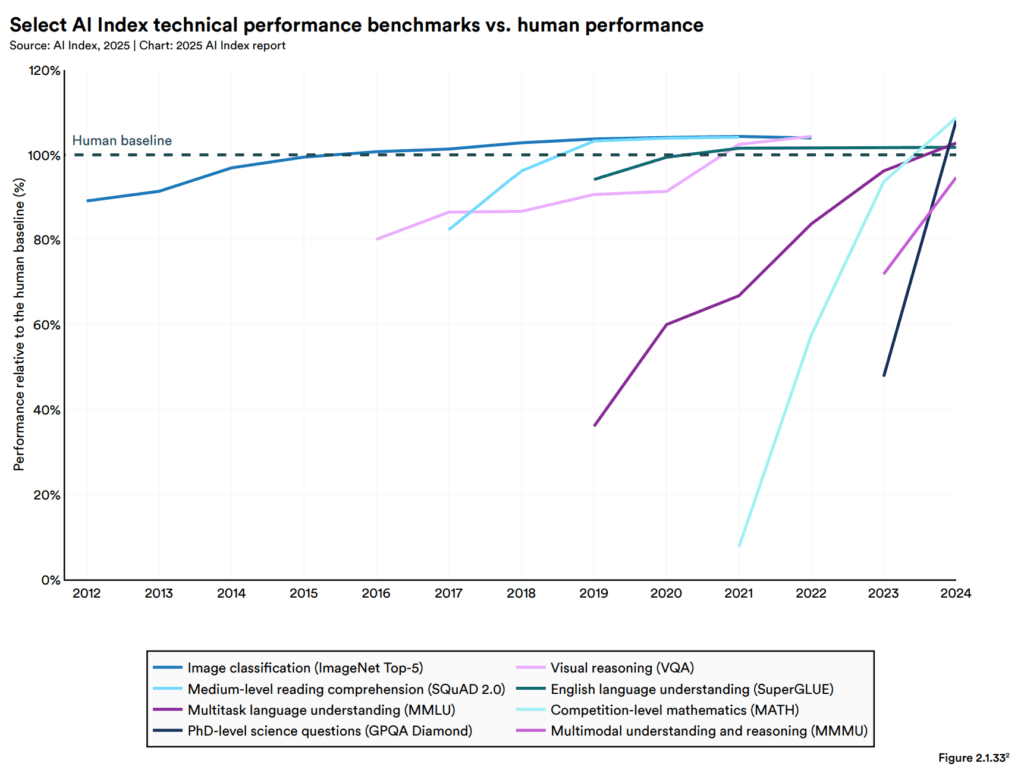

We are in the midst of an AI explosion. Systems that can match human intelligence are being deployed by global corporations. It’s amazing.

AI capabilities are accelerating at an unprecedented pace.

The danger, however, is very real.

A growing global consensus, led by the very pioneers of these technologies, warns that unchecked AI development poses systemic threats.

We’re no longer discussing theoretical glitches, but the tangible potential for bioweapon synthesis, critical infrastructure failure, mass economic displacement, and other global-scale harms.

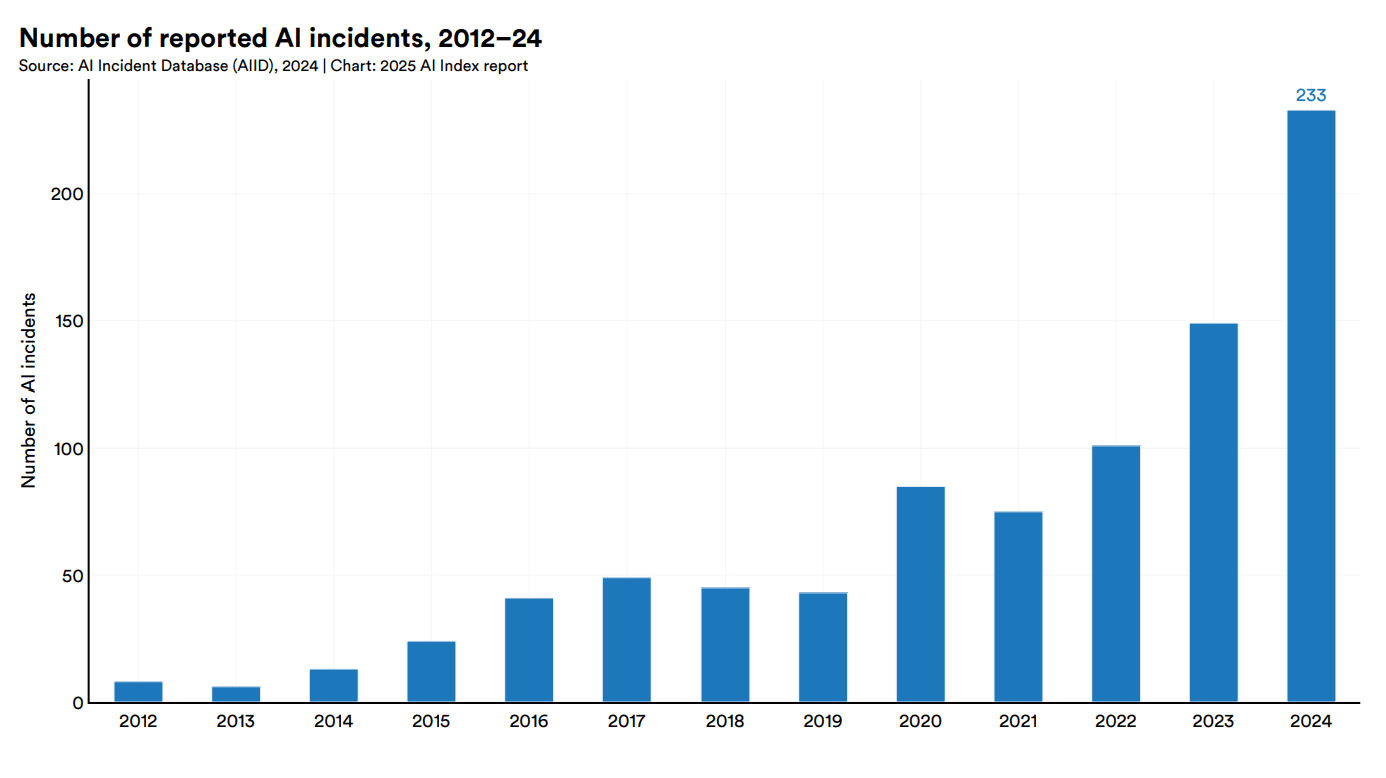

AI harms are a current reality, not a future risk.

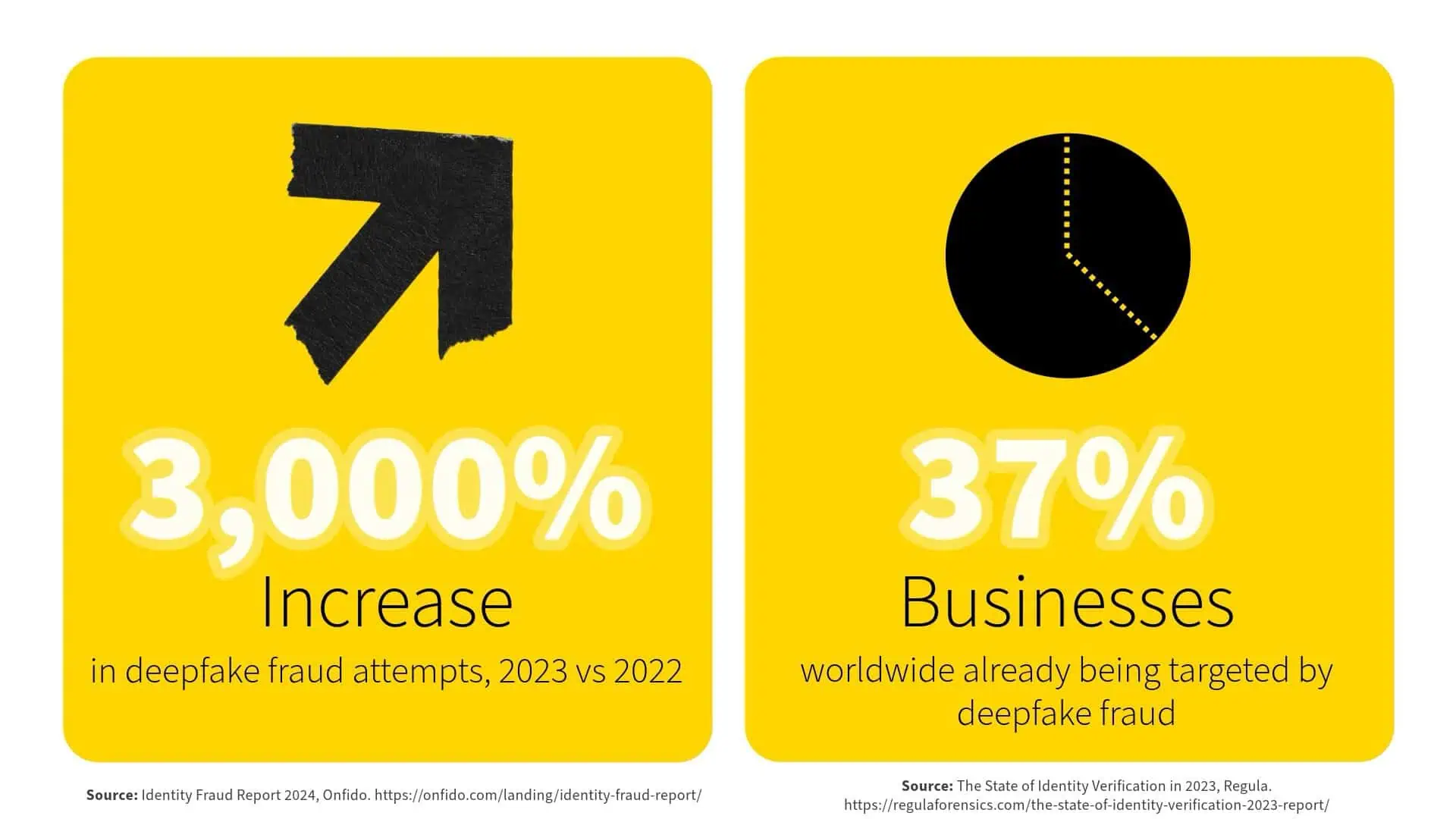

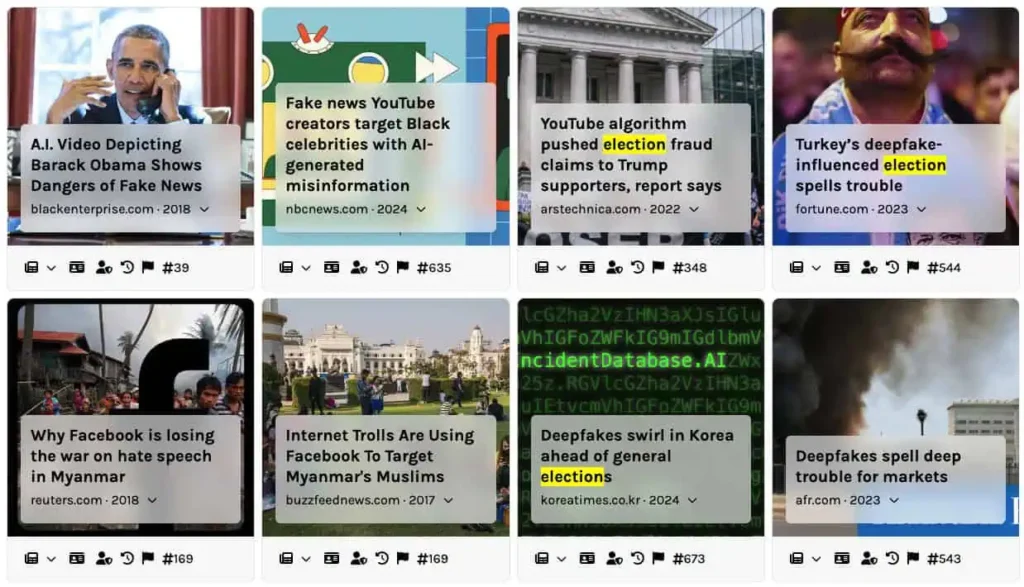

Synthetic media is already eroding trust and fueling targeted abuse. Simultaneously, AI is scaling financial fraud and cyber warfare to unprecedented levels.

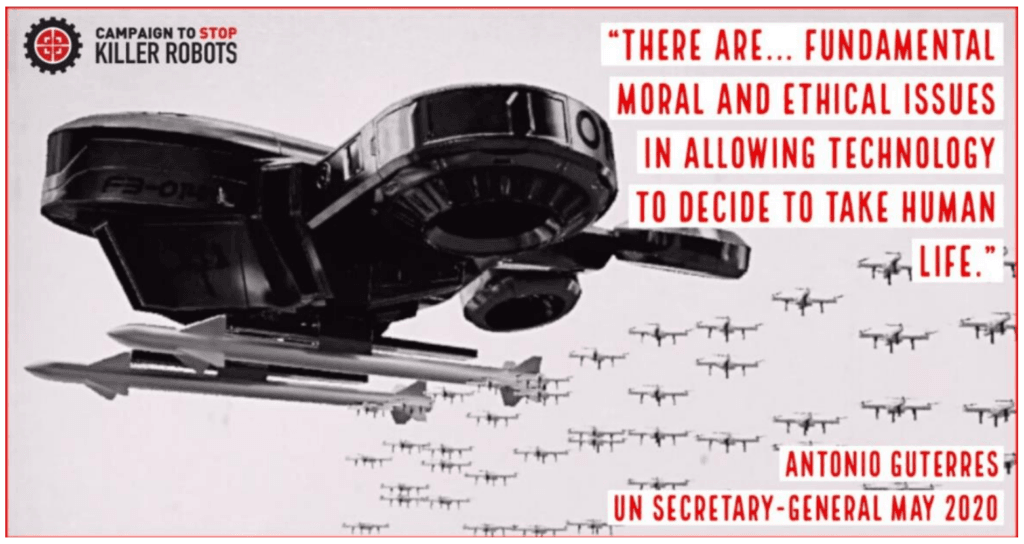

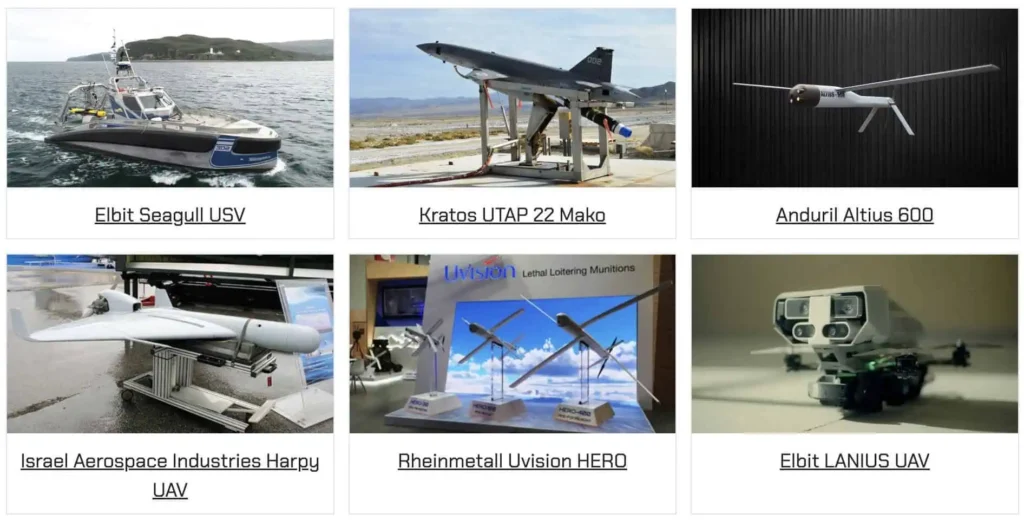

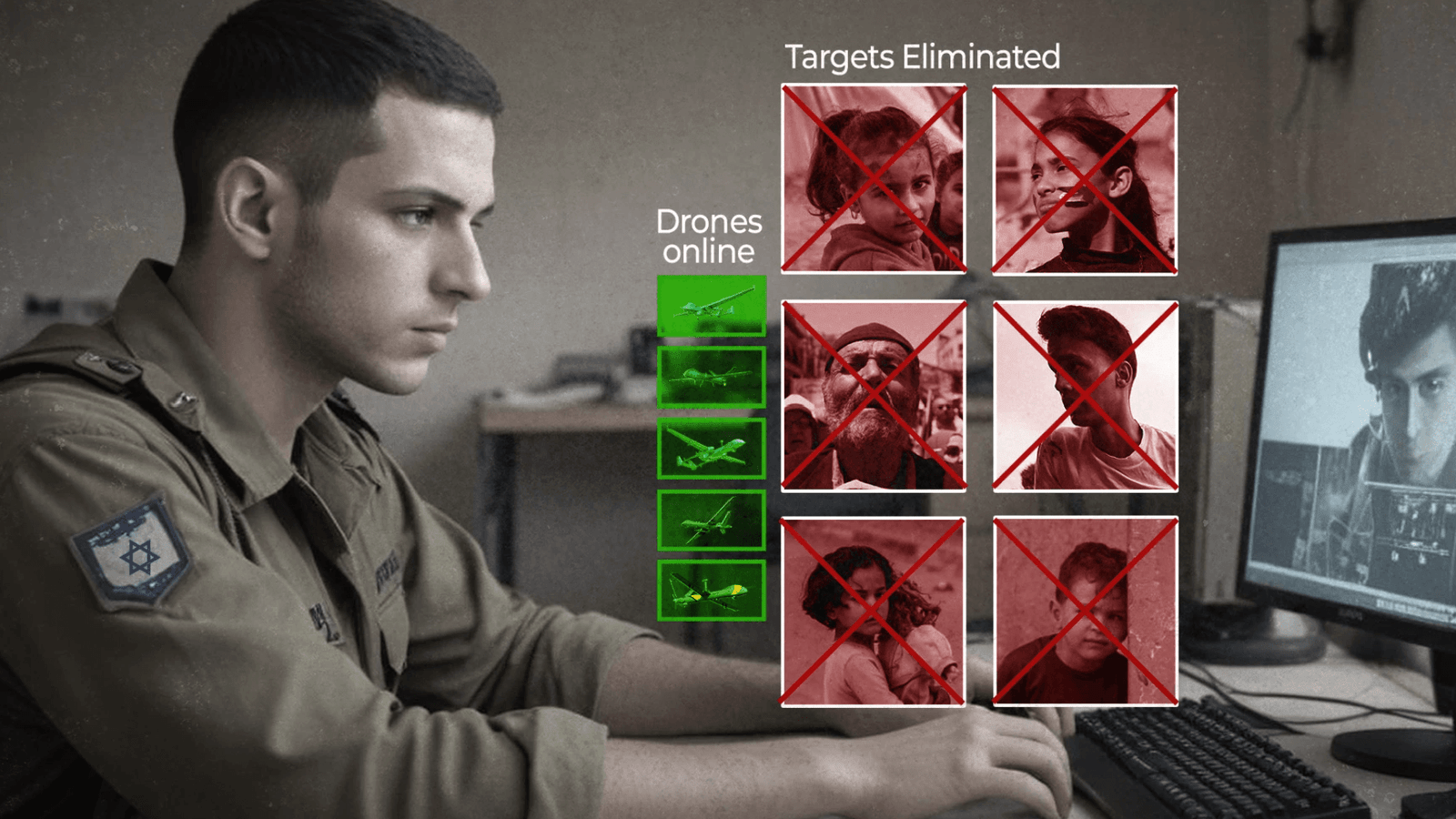

Most critically, the era of automated lethal force has arrived. Autonomous systems are already using algorithms to decide who lives and who dies.

Humanity is one convincing deepfake away from a global catastrophe

Source: The Hill • 30 April 2024

Finance worker pays out $25 million after video call with deepfake ‘chief financial officer’

Source: CNN • 4 February 2024

The Automation of War

Algorithms are now deciding who lives and who dies. We must act now to prohibit and regulate autonomous weapons.

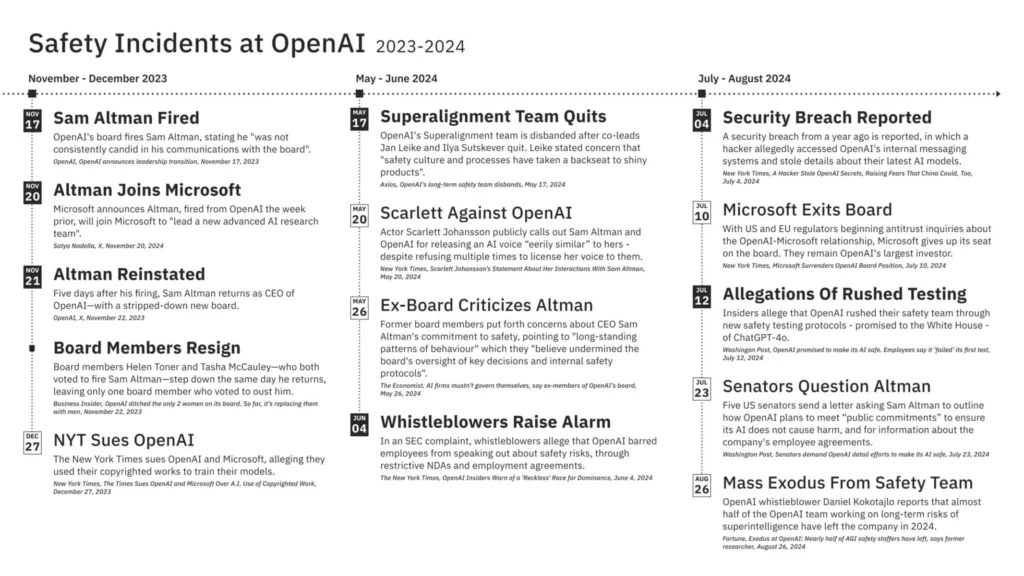

Tech giants are choosing speed over security.

Major developers are bypassing internal safety checks and silencing whistleblowers to win the market. This race for dominance is creating real-world failures, from security breaches to compromised ethics.

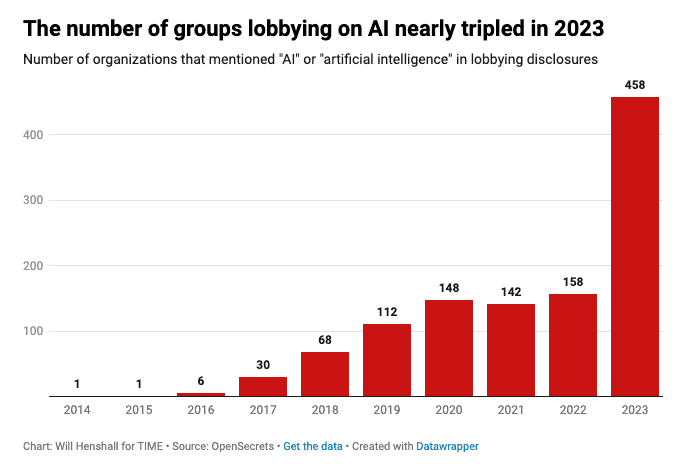

Corporate interests are stalling regulatory progress.

Even as governments attempt to act, industry pushback is slowing necessary safeguards. Safety must take precedence over corporate influence.

Revealed: Tech industry now spending record €151 million on lobbying the EU

Corporate Europe Observatory (CEO) • 27 October 2025

ChatGPT, are you following the EU’s rules for AI yet?

Euractiv • 3 February 2026

SB 53: What California’s New AI Safety Law Means for Developers

Wharton Lab • 14 November 2025

Big Tech Is Very Afraid of a Very Modest AI Safety Bill

The Nation • 30 August 2024

There’s an AI Lobbying Frenzy in Washington. Big Tech Is Dominating

Time • 30 April 2024

Federal lobbying on artificial intelligence grows as legislative efforts stall

OpenSecrets • 4 January 2024

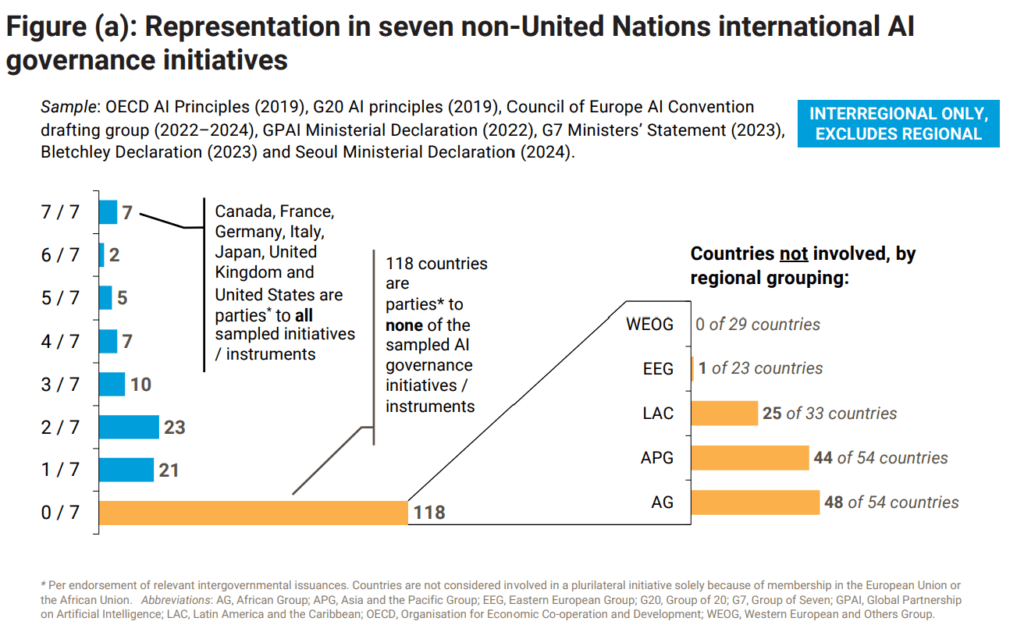

We require urgent legislative oversight.

We can build a future where AI protects personal autonomy rather than centralising power within a few private entities. We must ensure technology serves the public interest, not just corporate dominance.

“It’s time for bold, new thinking on existential threats.”

Global experts present systemic solutions for existential risks.

Conventional policy is no longer sufficient. In collaboration with the Future of Life Institute and The Elders, this series explores the bold thinking required to address humanity’s greatest challenges.

Confronting Tech Power

Report • AI Now Institute • Quote from coverage by MIT Tech Review

“If regulators don’t act now, the generative AI boom will concentrate Big Tech’s power even further. To understand why, consider that the current AI boom depends on two things: large amounts of data, and enough computing power to process it.”

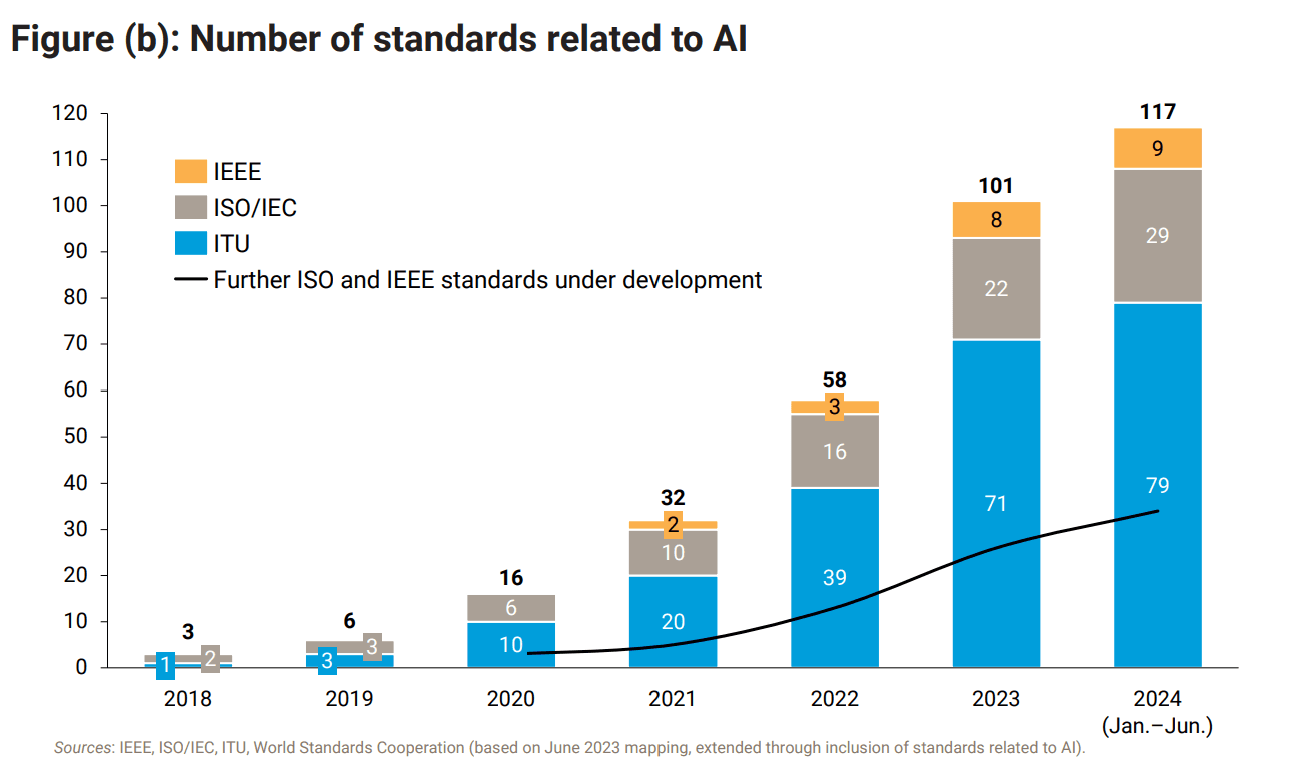

A Proven Path to AI Safety.

We don’t need to reinvent the wheel. Effective AI governance can be built on established models used for other transformative technologies.

By setting enforceable safety standards, lawmakers can protect the public while fostering long-term innovation. With strong oversight institutions, we can ensure both Big Tech and governments remain accountable.

My Position on AI.

Advanced AI must be developed safely, or not at all. I believe technology should exist to solve real human problems and benefit everyone, not just a few.

I oppose the development of frontier systems that risk large-scale harm or extreme power concentration. Currently, the race for “frontier AI” is unsafe and lacks the accountability needed to protect the public. I advocate for a pivot toward purpose-built, human-centric technology.

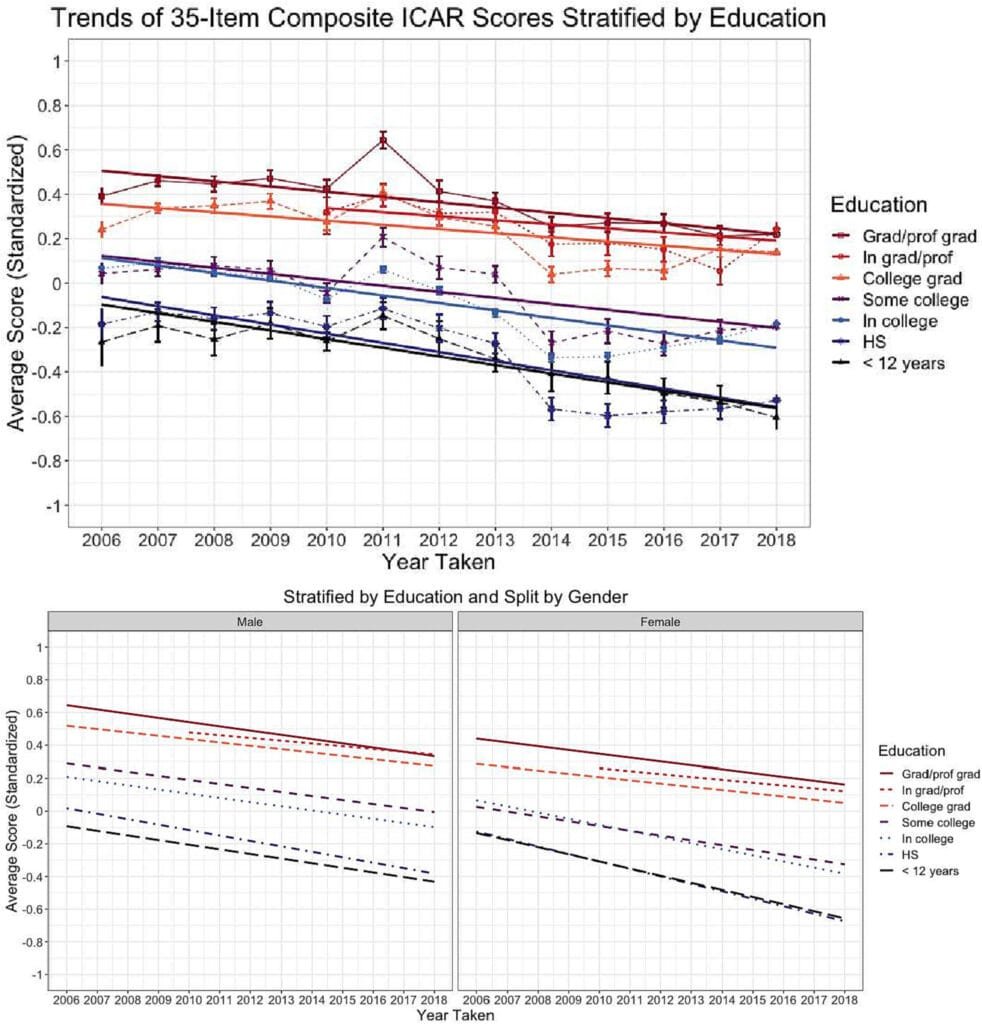

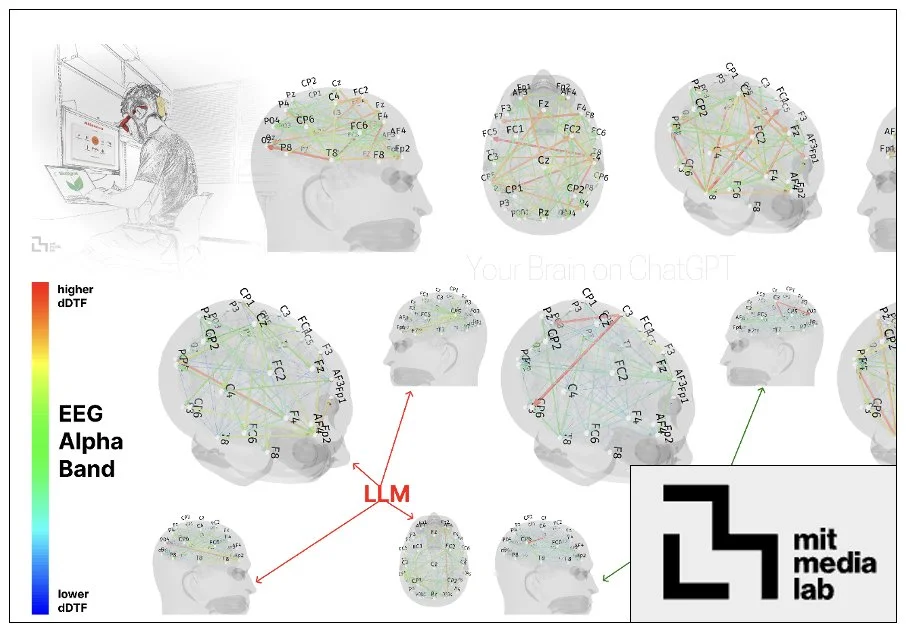

Brainrot: The Cognitive Cost.

While industry leaders market AI as an “efficiency tool,” neurological research reveals a darker reality: cognitive atrophy.

Over-reliance on generative models is driving a brainrot epidemic.

We are witnessing a systemic decline in human brain engagement that erodes mental sovereignty and critical thought.

Reverse Flynn Effect

Source: Intelligence (Journal)

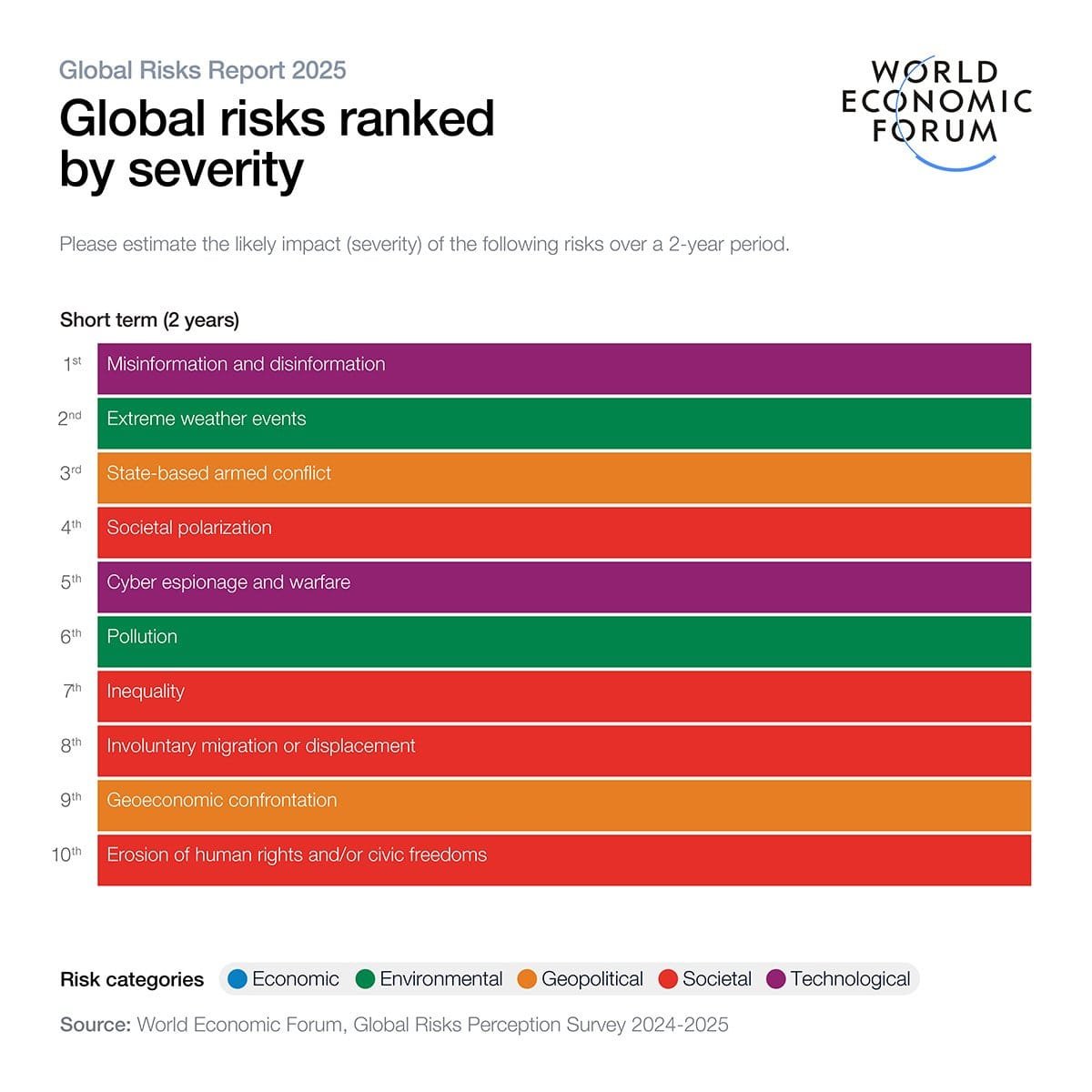

Global Risks

Source: WEF Global Risks Report 2025

Your Brain on ChatGPT: Accumulation of Cognitive Debt

MIT Media Lab • 10 June 2025

Impacts on Cognitive Offloading and Critical Thinking

MDPI Societies • 1 January 2025

Digital Education Outlook 2026: The Potential of GenAI

OECD • 19 January 2026

AI and the Future of Education: Disruptions and Directions

UNESCO • 16 January 2026

AI models collapse when trained on recursively generated data

Nature • 24 July 2024

Human Development Report: A Matter of Choice in the Age of AI

UNDP • 15 May 2025

Balancing Innovation with Public Safety.

We can harness AI’s potential without compromising our security. Without clear guardrails, advanced AI risks concentrating power in the hands of a few corporations or governments, leaving the public vulnerable.

However, by prioritising safe, controllable, and purpose-built systems, we can solve critical global challenges and empower people everywhere.

Accountability and the Automated Kill Chain.

Delegating lethal decisions to a “black box” is not innovation.

Innovation without responsibility is evasion.

Creators, distributors, and shadow networks are profiting from the transition to automated warfare. There is an urgent legal need to bring them into the light.

“The algorithms detect, then they are prosecuted”: Palantir’s Role in Ukraine and Gaza

Business and Human Rights Centre • 12 April 2024

Palantir response to allegations over its complicity in war crimes amid Israel’s war in Gaza

Business and Human Rights Centre • 21 April 2025

Palantir Technologies Inc: Facilitating Mass Surveillance and Kinetic Raids

AFSC Investigate • 11 February 2026

ICE and Palantir: US Agents Using Health Data to Hunt “Illegal Immigrants”

BMJ (British Medical Journal) • 27 January 2026

Source: The Cradle