AI Ethics Basics: A Comprehensive Guide for Beginners in 2024

Wow, can you believe it? Gartner has predicted that 75% of large organizations will hire AI behaviour forensic, privacy, and customer trust specialists to reduce brand and reputation risks. That’s a striking forecast, and it really drives home the importance of AI ethics in today’s rapidly evolving tech landscape!

Hey there, fellow tech enthusiasts and curious minds! I’m thrilled to dive into the fascinating world of AI ethics with you today. As someone who’s been knee-deep in the AI field for years, I can’t stress enough how crucial it is for all of us to get a grip on the basics of AI ethics. Trust me, it’s not just some boring philosophical mumbo-jumbo – it’s the key to ensuring that our AI-powered future is one we actually want to live in!

In this guide, we’ll explore the fundamental principles of AI ethics, tackle some of the thorny challenges we’re facing, and even look at some real-world examples that’ll make you go “Whoa, I never thought about that!” So buckle up, because we’re about to embark on a journey that’ll change the way you think about AI forever. Ready? Let’s dive in!

What Is AI Ethics?

Alright, let’s start with the basics. What the heck is AI ethics, anyway? Well, I remember when I first stumbled upon this concept: I was like a deer in headlights, totally overwhelmed by the jargon and complexity. But don’t worry, I’ve got your back!

In simple terms, AI ethics is all about making sure that artificial intelligence systems are developed and used in ways that benefit humanity and don’t cause harm. Think of it as a moral compass for the people who build and deploy AI. The machines don’t pick their own values; we shape them through the data we feed them, the goals we set, and the ways we put them to work.

The importance of AI ethics can’t be overstated. I mean, think about it: we’re creating incredibly powerful systems that can make decisions that impact our lives in major ways. Without ethical guidelines, we could end up in a sci-fi nightmare scenario faster than you can say “Skynet”!

AI ethics isn’t some new-fangled idea, either. Its roots go back to the early days of computing. I remember reading about the first discussions on machine ethics from the 1940s and 50s: Isaac Asimov introduced his Three Laws of Robotics in a 1942 short story, and Alan Turing asked whether machines can think in his 1950 paper “Computing Machinery and Intelligence”. Talk about being ahead of the curve!

As AI has become more advanced and integrated into our daily lives, the field of AI ethics has exploded. We’re not just talking about avoiding killer robots anymore (although that’s still a concern, believe it or not). Today, AI ethics covers everything from data privacy to job displacement to the potential for AI to perpetuate societal biases.

In my experience, grasping the basics of AI ethics is like putting on a pair of special glasses. Suddenly, you start seeing the ethical implications in every AI system you encounter – from the algorithms that recommend your next Netflix binge to the facial recognition systems used in airports. It’s both fascinating and a little scary, to be honest!

But hey, knowledge is power, right? By understanding AI ethics, we can all play a part in shaping a future where AI truly serves humanity’s best interests. And trust me, that’s a future worth fighting for!

Key Principles of AI Ethics

Okay, folks, let’s roll up our sleeves and dive into the meat and potatoes of AI ethics – the key principles that guide the development and deployment of ethical AI systems. I’ve gotta tell you, when I first started exploring these principles, it felt like trying to solve a Rubik’s cube blindfolded. But don’t worry, I’ll break it down for you in a way that won’t make your head spin!

- Transparency and explainability: This is a biggie, folks! It’s all about making sure AI systems aren’t just magical black boxes spitting out decisions. We need to be able to peek under the hood and understand how these systems are making their choices. I remember working on a project where we had to explain a complex AI decision-making process to a group of non-tech executives. Let me tell you, it was like trying to teach quantum physics to a goldfish! But that experience really drove home how crucial transparency is for building trust in AI.

- Fairness and non-discrimination: Here’s a principle that’s close to my heart. AI systems should treat all individuals and groups fairly, without discriminating based on race, gender, age, or any other protected characteristic. Sounds simple, right? Well, let me tell you, it’s anything but! I’ve seen first-hand how even well-intentioned AI can perpetuate biases if we’re not careful. It’s like trying to navigate a minefield while blindfolded – one wrong step, and boom!

- Privacy and data protection: In this day and age, data is the new oil, and protecting it is paramount. AI systems often require vast amounts of data to function effectively, but that data has to be collected, stored, and used ethically: gathering only what the system actually needs, being clear with people about how it will be used, and keeping it secure. I learned the security part the hard way when I accidentally left my laptop unlocked at a coffee shop once. Talk about a panic attack!

- Accountability and responsibility: Who’s responsible when an AI system makes a mistake? This principle is all about making sure there are clear lines of accountability. It’s like playing a high-stakes game of “hot potato” – someone needs to be responsible when things go wrong. Trust me, you don’t want to be the one left holding that particular potato!

- Safety and security: Last but definitely not least, we need to ensure that AI systems are safe and secure. This isn’t just about preventing Terminator-style robot uprisings (although that would be pretty cool to watch, from a safe distance). It’s about making sure AI systems can’t be hacked, manipulated, or used in ways that could harm individuals or society. I once worked on a project where we had to “stress test” an AI system for potential security vulnerabilities. Let’s just say it involved a lot of coffee, late nights, and more than a few moments of sheer panic!
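To make transparency a bit more concrete, here’s a minimal Python sketch of the simplest kind of explanation. For a linear scoring model, each feature’s contribution to a decision is just its weight multiplied by its value, so you can list exactly what pushed a candidate’s score up or down. The model, feature names, and weights are all hypothetical, invented for illustration; real systems (deep neural networks especially) need more sophisticated explanation tools.

```python
from dataclasses import dataclass


@dataclass
class LinearScorer:
    """Hypothetical linear model: score = bias + sum(weight * feature value)."""
    weights: dict[str, float]
    bias: float = 0.0

    def score(self, features: dict[str, float]) -> float:
        return self.bias + sum(self.weights[name] * value for name, value in features.items())

    def explain(self, features: dict[str, float]) -> list[tuple[str, float]]:
        """Each feature's contribution (weight * value), largest absolute effect first."""
        contributions = [(name, self.weights[name] * value) for name, value in features.items()]
        return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


# Invented screening model and candidate, for illustration only.
model = LinearScorer(
    weights={"skills_match": 2.0, "years_experience": 0.1, "employment_gap_years": -0.5},
    bias=-1.0,
)
candidate = {"skills_match": 0.5, "years_experience": 4.0, "employment_gap_years": 1.0}

print(f"score = {model.score(candidate):+.2f}")
for name, contribution in model.explain(candidate):
    print(f"  {name}: {contribution:+.2f}")
```

Breaking a decision into per-feature contributions like this gives a non-technical reader something they can actually inspect. It also makes questionable features easy to spot, like the penalty for an employment gap in this made-up model.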

These principles might seem straightforward, but let me tell you, implementing them in practice is like trying to juggle flaming torches while riding a unicycle. It takes skill, practice, and a whole lot of patience. But hey, nobody said creating ethical AI would be easy, right?

Remember, these principles aren’t set in stone. As AI technology evolves, so too will our understanding of what constitutes ethical AI. It’s a constantly moving target, which is what makes this field so darn exciting (and occasionally terrifying)!

So, the next time you interact with an AI system – whether it’s Siri, Alexa, or that creepily accurate ad targeting on social media – take a moment to think about these principles. Are they being upheld? How could they be improved? Trust me, once you start thinking this way, you’ll never look at AI the same way again!

Common Ethical Challenges in AI

Alright, buckle up, folks! We’re about to dive into the murky waters of ethical challenges in AI. Trust me, after years of working in this field, I’ve seen enough ethical dilemmas to make your head spin faster than a malfunctioning robot!

- Algorithmic bias: This is the big bad wolf of AI ethics, and let me tell you, it’s a tricky beast to tame. Algorithmic bias occurs when AI systems produce systematically unfair or discriminatory outcomes, usually because they learned from skewed historical data or because of flawed choices in how the model was designed. I remember working on a recruiting AI that was supposed to help companies find the best candidates. Sounds great, right? Well, we quickly realised it was favouring male candidates for tech roles, because the historical hiring data it learned from was dominated by men. Talk about a face-palm moment! It’s like teaching a parrot to speak, only to realise it’s picked up all your bad words.

- Job displacement: Here’s a thorny issue that keeps me up at night. As AI becomes more advanced, it’s starting to take over jobs that were once done by humans. On one hand, it’s amazing to see what AI can do. On the other hand, it’s pretty scary if you’re in a job that could be automated. I’ve had some heated debates with colleagues about this – it’s like trying to solve a Rubik’s cube where every solution displaces someone’s livelihood. Not fun!

- Privacy concerns: In the age of AI, data is king. But with great data comes great responsibility (sorry, couldn’t resist the Spider-Man reference). AI systems often require massive amounts of personal data to function effectively, which raises serious privacy concerns. I once worked on a project involving smart home devices, and let me tell you, the amount of personal data these things collect is mind-boggling. It’s like having a nosy neighbour who knows everything about you, from your favourite TV shows to how often you order pizza!

- Autonomous decision-making: As AI systems become more advanced, they’re increasingly making decisions without human intervention. Sounds cool, right? Well, it’s all fun and games until an AI makes a decision with serious real-world consequences. I remember a case where automated trading algorithms contributed to a “flash crash” in the stock market. It was like watching a car crash in slow motion: fascinating, but terrifying.

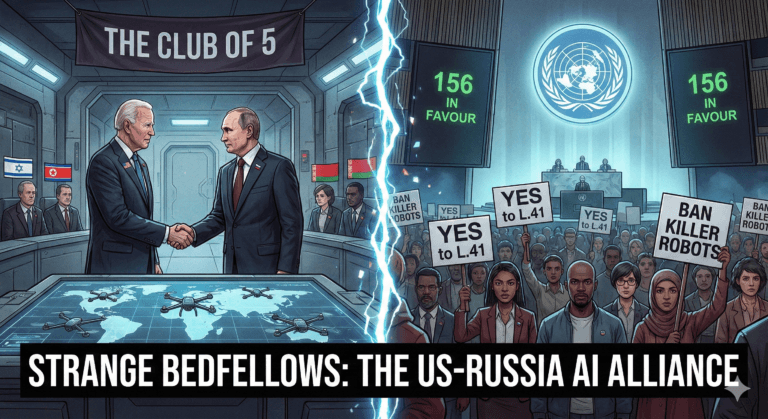

- AI in warfare and surveillance: Here’s where things get really dicey. The use of AI in military applications and mass surveillance raises some serious ethical questions. I’ve had some intense discussions with colleagues about the ethics of autonomous weapons systems. It’s like playing a high-stakes game of chess where the pieces are real people’s lives. Not exactly a fun Friday night activity, if you ask me.
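If you’re wondering how teams actually catch the kind of hiring bias described above, one common first check is to compare selection rates across groups. The Python sketch below does that on made-up screening outcomes (not data from any real system). It divides the lowest group’s selection rate by the highest; under the “four-fifths rule” from US employee-selection guidelines, a ratio below 0.8 is generally treated as evidence of adverse impact that deserves investigation.

```python
from collections import defaultdict


def selection_rates(decisions):
    """decisions: iterable of (group, selected) pairs, where selected is a bool."""
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        raise ValueError("no group had any selections")
    return min(rates.values()) / highest


# Toy screening outcomes (hypothetical data, for illustration only).
decisions = ([("men", True)] * 30 + [("men", False)] * 70
             + [("women", True)] * 18 + [("women", False)] * 82)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio = {ratio:.2f}")
if ratio < 0.8:
    print("Below the four-fifths threshold: investigate before deploying.")
```

A check like this is only a starting point: it flags a disparity but doesn’t explain it, and it assumes you have reliable group labels to audit against.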

These challenges keep AI ethicists like me on our toes. It’s a constant battle to stay ahead of the curve and anticipate potential ethical issues before they become real-world problems. Sometimes I feel like I’m trying to predict the weather in a world where the laws of physics change every day!

But here’s the thing – as daunting as these challenges are, they’re also what make this field so exciting. Every day brings new puzzles to solve, new ethical conundrums to untangle. It’s like being a detective in a sci-fi novel, only the stakes are very, very real.

So, the next time you hear about an AI doing something amazing (or terrifying), take a moment to consider the ethical implications. Are we creating a better world, or are we opening Pandora’s box? It’s a question we all need to grapple with as AI becomes an ever-larger part of our lives. And trust me, it’s a question that’ll keep you up at night – but in the best possible way!

Real-World Examples of AI Ethics Issues

Alright, folks, it’s story time! Grab your popcorn (or your notebook if you’re the studious type) because we’re about to dive into some real-world examples of AI ethics issues. Trust me, these cases are juicier than the latest Hollywood gossip!

- Case study 1: Facial recognition controversies

Oh boy, where do I even start with this one? Facial recognition technology has been causing quite a stir in recent years. I remember attending a tech conference where a company was showcasing their “state-of-the-art” facial recognition system. They were so proud, you’d think they’d invented sliced bread!

But here’s the kicker: when they demoed the system, it struggled to accurately identify people with darker skin tones. Talk about an awkward moment! It was like watching a comedy of errors, except it wasn’t funny at all. Independent audits, such as the 2018 Gender Shades study and NIST’s 2019 report on demographic effects in face recognition, have documented similar accuracy gaps in commercial systems. It’s a serious issue, because biased facial recognition can lead to false identifications and real discrimination.

And don’t even get me started on the privacy concerns! The idea that our faces could be scanned and identified without our knowledge or consent is straight out of a dystopian novel. I’ve had heated debates with friends about whether the convenience of unlocking our phones with our faces is worth the potential privacy trade-offs. It’s like deciding whether to eat a slice of delicious cake that might give you food poisoning: tempting, but risky!
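That demo failure is a good illustration of why auditors report error rates per demographic group rather than a single accuracy number: a system can look excellent on average while failing one group far more often. Here’s a minimal Python sketch using invented test results; the group labels and counts are placeholders, not measurements of any real product.

```python
from collections import Counter


def error_rates_by_group(results):
    """results: iterable of (group, correct) pairs from a labelled test set."""
    totals = Counter()
    errors = Counter()
    for group, correct in results:
        totals[group] += 1
        if not correct:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical test results: 900 images from group A, only 100 from group B.
results = ([("group_a", True)] * 891 + [("group_a", False)] * 9
           + [("group_b", True)] * 85 + [("group_b", False)] * 15)

overall_error = sum(not correct for _, correct in results) / len(results)
per_group = error_rates_by_group(results)
print(f"overall error rate: {overall_error:.1%}")
for group, rate in per_group.items():
    print(f"{group}: {rate:.1%}")
```

With these made-up numbers, the overall error rate is 2.4%, which hides a 15% error rate for the smaller group.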

- Case study 2: AI in hiring processes

Now, this is a topic close to my heart (and my wallet). Many companies are now using AI to screen job applications and even conduct initial interviews. Sounds efficient, right? Well, not so fast!

I once consulted for a company that implemented an AI hiring system. They were over the moon about how much time it was saving them. But then we discovered that the system was systematically rejecting candidates from certain universities. Why? Because historically, the company had hired more people from a select group of schools. The AI had learned this pattern and was perpetuating it.

It’s like asking a mirror for advice on who to hire: all it can do is reflect your own biases back at you. This case really drove home the importance of carefully monitoring AI systems for unintended biases, especially in high-stakes decisions like hiring.
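To see how a screening model can “learn” a pattern like this, consider the crudest possible version: score each applicant by the historical hire rate of their university. The Python sketch below uses invented data and hypothetical school and applicant names. Real systems are far more complex, but the failure mode is the same whenever a feature like school acts as a stand-in for past hiring habits rather than for ability.

```python
from collections import defaultdict


def train_school_scores(history):
    """history: list of (school, was_hired) pairs from past hiring decisions."""
    applied = defaultdict(int)
    hired = defaultdict(int)
    for school, was_hired in history:
        applied[school] += 1
        hired[school] += int(was_hired)
    return {school: hired[school] / applied[school] for school in applied}


def screen(applicants, school_scores, threshold=0.5):
    """Pass applicants whose school's historical hire rate meets the threshold.

    Schools never seen in training get a score of 0.0, so they are always rejected.
    """
    return [name for name, school in applicants
            if school_scores.get(school, 0.0) >= threshold]


# Invented history: in the past, the company mostly hired from "School X".
history = ([("School X", True)] * 40 + [("School X", False)] * 20
           + [("School Y", True)] * 5 + [("School Y", False)] * 35)

scores = train_school_scores(history)
applicants = [("Ana", "School Y"), ("Ben", "School X"), ("Chen", "School Z")]
print(scores)
print(screen(applicants, scores))
```

Note the design choice in `screen`: an applicant from a school the model has never seen gets a score of zero, which mirrors how a system trained only on past hires can shut out candidates from unfamiliar universities.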

- Case study 3: Autonomous vehicles and ethical dilemmas

Okay, let’s shift gears (pun intended) to the world of self-driving cars. This is where ethics gets really tricky. Imagine this scenario: an autonomous vehicle is about to crash. It can either swerve left and hit an elderly person, or swerve right and hit a child. What should it do?

I once participated in a workshop where we grappled with these kinds of ethical dilemmas. Let me tell you, it was more intense than a game of Monopoly with my ultra-competitive family! We debated for hours, and you know what? We couldn’t reach a consensus.

This example really highlights the complexity of programming ethics into AI systems. It’s not just about following traffic rules – it’s about making split-second decisions that could have life-or-death consequences. It’s like trying to teach a computer to be Solomon – wise, fair, and able to make impossibly difficult choices.

These real-world examples show us that AI ethics isn’t just some abstract philosophical concept. It has real, tangible impacts on our lives. From potentially biased hiring decisions to life-or-death choices made by autonomous vehicles, the ethical implications of AI are all around us.

So, the next time you apply for a job online, unlock your phone with your face, or see a self-driving car on the road, take a moment to think about the ethical considerations at play. It’s like putting on a pair of AI ethics glasses – suddenly, you start seeing these issues everywhere!

And remember, as AI continues to advance, new ethical challenges will inevitably arise. It’s up to all of us (developers, users, and society at large) to stay vigilant.

Implementing AI Ethics in Practice

Alright, folks, now we’re getting to the nitty-gritty. Implementing AI ethics isn’t just about talking the talk – it’s about walking the walk. And let me tell you, it’s more like trying to walk a tightrope while juggling flaming torches!

First things first: ethical AI frameworks and guidelines, such as the OECD AI Principles, the EU’s Ethics Guidelines for Trustworthy AI, and the NIST AI Risk Management Framework. These are like the Ten Commandments of AI ethics, except there are usually more than ten, and they’re constantly evolving. I remember when I first started working with these frameworks, I felt like I was trying to memorise a phone book! But trust me, they’re essential for keeping our AI systems on the straight and narrow.

One of the most crucial aspects of implementing AI ethics is having diverse teams. And I’m not just talking about diversity for diversity’s sake. I once worked on a project where we had a team that was more homogeneous than a gallon of milk. Guess what happened? We ended up creating an AI system that worked great… for people exactly like us. Since then, I’ve been a huge advocate for diverse teams. It’s like trying to solve a complex puzzle – the more different perspectives you have, the better chance you have of seeing the whole picture.

Now, let’s talk about ongoing education and training. This field moves faster than a cheetah on roller skates, I swear! What was cutting-edge last year might be obsolete today, so teams need to make a deliberate effort to keep their knowledge current through regular training and by following new research and guidance. It’s like being on a never-ending treadmill of learning: exhausting, but exhilarating!

Lastly, we need to consider ethical implications right from the get-go in AI project planning. It’s not something you can just slap on at the end like a Band-Aid. I’ve seen projects go completely off the rails because ethical considerations were an afterthought. It’s like building a house without a foundation – it might look great at first, but it’s bound to collapse sooner or later.

The Future of AI Ethics

Buckle up, because we’re about to take a trip to the future! And let me tell you, it’s both exciting and terrifying – kind of like riding a roller coaster, except the track is still being built as you ride.

Emerging trends in AI ethics are popping up faster than I can keep track of. One day we’re talking about bias in facial recognition, the next we’re debating whether AI systems could ever be sentient, and what rights they might have if they were (yeah, that’s a real debate now, apparently). Keeping up can feel like chasing a train that keeps speeding up!

As for potential regulatory developments, well, let’s just say lawmakers are scrambling to keep up. The European Union’s AI Act, adopted in 2024, is one of the first comprehensive AI laws, and other jurisdictions are still working out their own approaches. I’ve sat in on some regulatory discussions, and let me tell you, it’s like watching a group of cats try to herd sheep. Everyone’s moving in different directions, but we’re all trying to get to the same place: a future where AI is both innovative and ethical.

Public awareness and engagement are absolutely crucial. We can’t just leave AI ethics to the experts (trust me, we’re just as confused as everyone else half the time). I’ve been trying to spread awareness about AI ethics, and sometimes it feels like I’m shouting into the void. But then I’ll have a conversation with someone who’s genuinely interested and engaged, and it reminds me why this work is so important. It’s like planting seeds – you might not see the results right away, but eventually, they’ll grow into something beautiful.

Conclusion

Whew! We’ve covered a lot of ground, haven’t we? From the basics of AI ethics to real-world challenges and future trends, we’ve taken quite the journey. And if your head is spinning a bit, don’t worry, that’s totally normal. AI ethics is a complex, ever-evolving field, and even we “experts” are constantly learning and adapting.

But here’s the thing – as complex and challenging as AI ethics can be, it’s also incredibly important. We’re at a crucial juncture in the development of AI technology. The decisions we make now will shape the future of not just technology, but of society as a whole. It’s like we’re all co-authors of a sci-fi novel, except this story is going to become our reality.

So, I encourage you to stay informed and engaged. Read up on AI ethics, participate in discussions, and don’t be afraid to ask questions. Remember, there are no dumb questions in AI ethics – only unexplored ethical dilemmas!

And for those of you working in AI or related fields, I implore you to apply ethical considerations in everything you do. It might seem like extra work now, but trust me, it’s worth it. It’s like flossing – a bit of a pain in the short term, but it prevents a world of trouble down the line.

Lastly, I want to hear from you! What are your thoughts on AI ethics? Have you encountered any ethical dilemmas in your own experiences with AI? Drop a comment below and let’s keep this conversation going. After all, the future of AI ethics isn’t just up to the experts – it’s up to all of us.

Remember, in the world of AI, we’re not just coding software – we’re coding the future. Let’s make sure it’s an ethical one!

Want to learn more about AI?

- Check out my article on Explainable AI or Algorithmic Bias to get started.

- Check out my article on Implications of AI in Warfare and Defence.

Avi is a researcher educated at the University of Cambridge, specialising in the intersection of AI Ethics and International Law. Recognised by the United Nations for his work on autonomous systems, he translates technical complexity into actionable global policy. His research provides a strategic bridge between machine learning architecture and international governance.
